From Zero to Generative

IAIFI Fellow, MIT

Carolina Cuesta-Lazaro

Art: "The art of painting" by Johannes Vermeer

Learning Generative Modelling from scratch

["Genie 2: A large-scale foundation model" Parker-Holder et al]

["Generative AI for designing and validating easily synthesizable and structurally novel antibiotics" Swanson et al]

Probabilistic ML has made high-dimensional inference tractable

1024x1024xTime

Carolina Cuesta-Lazaro IAIFI/MIT - From Zero to Generative

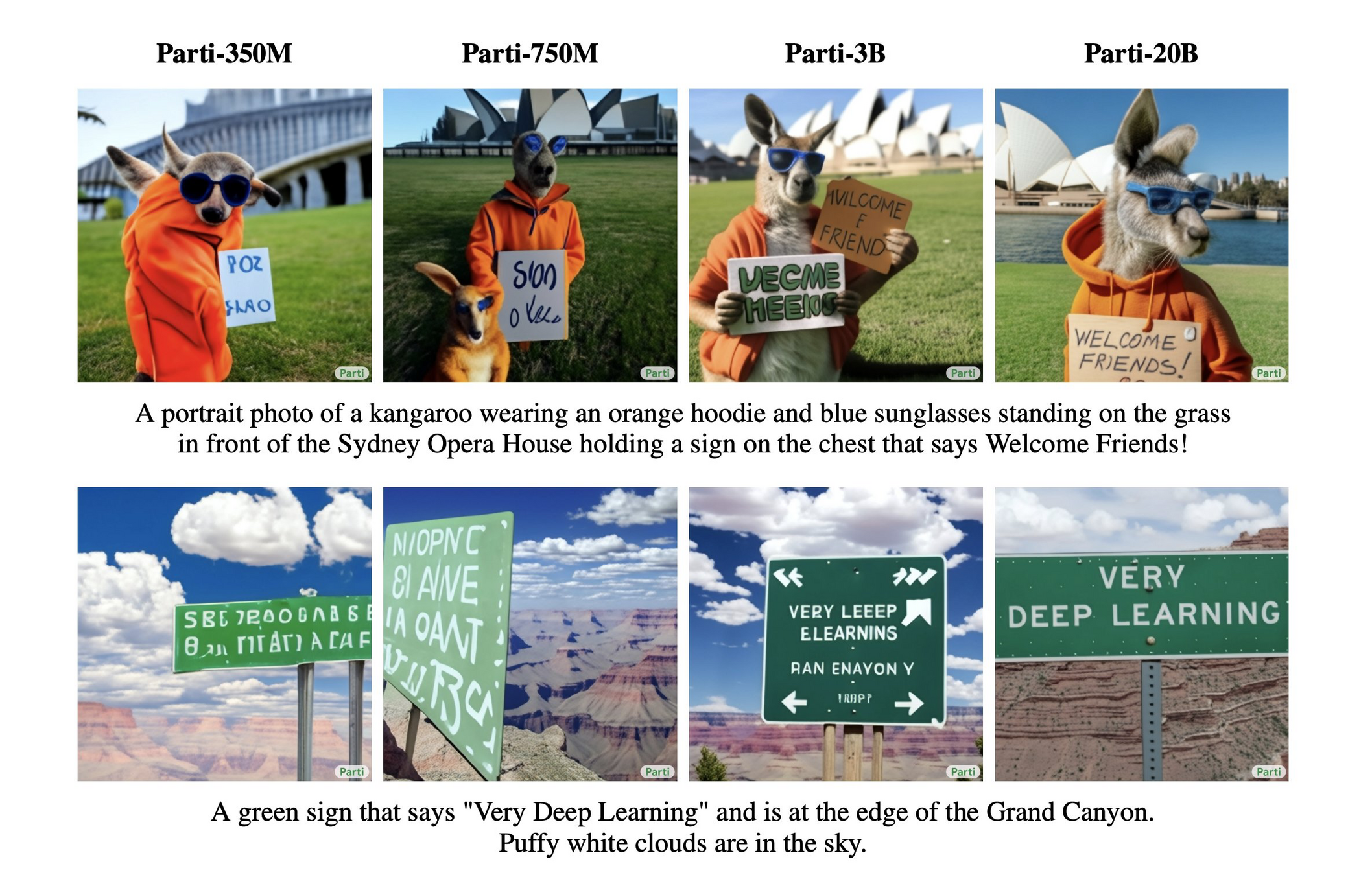

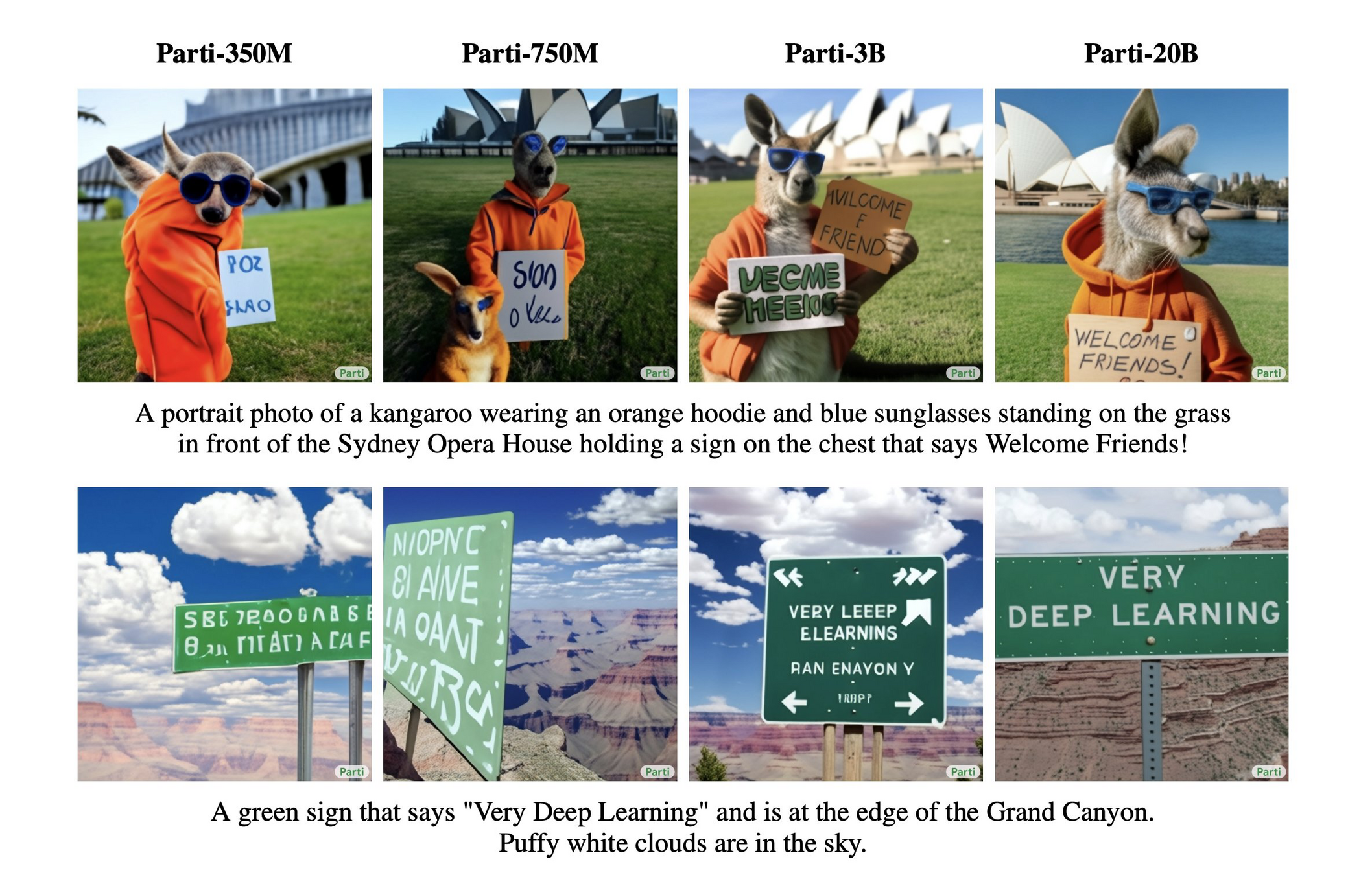

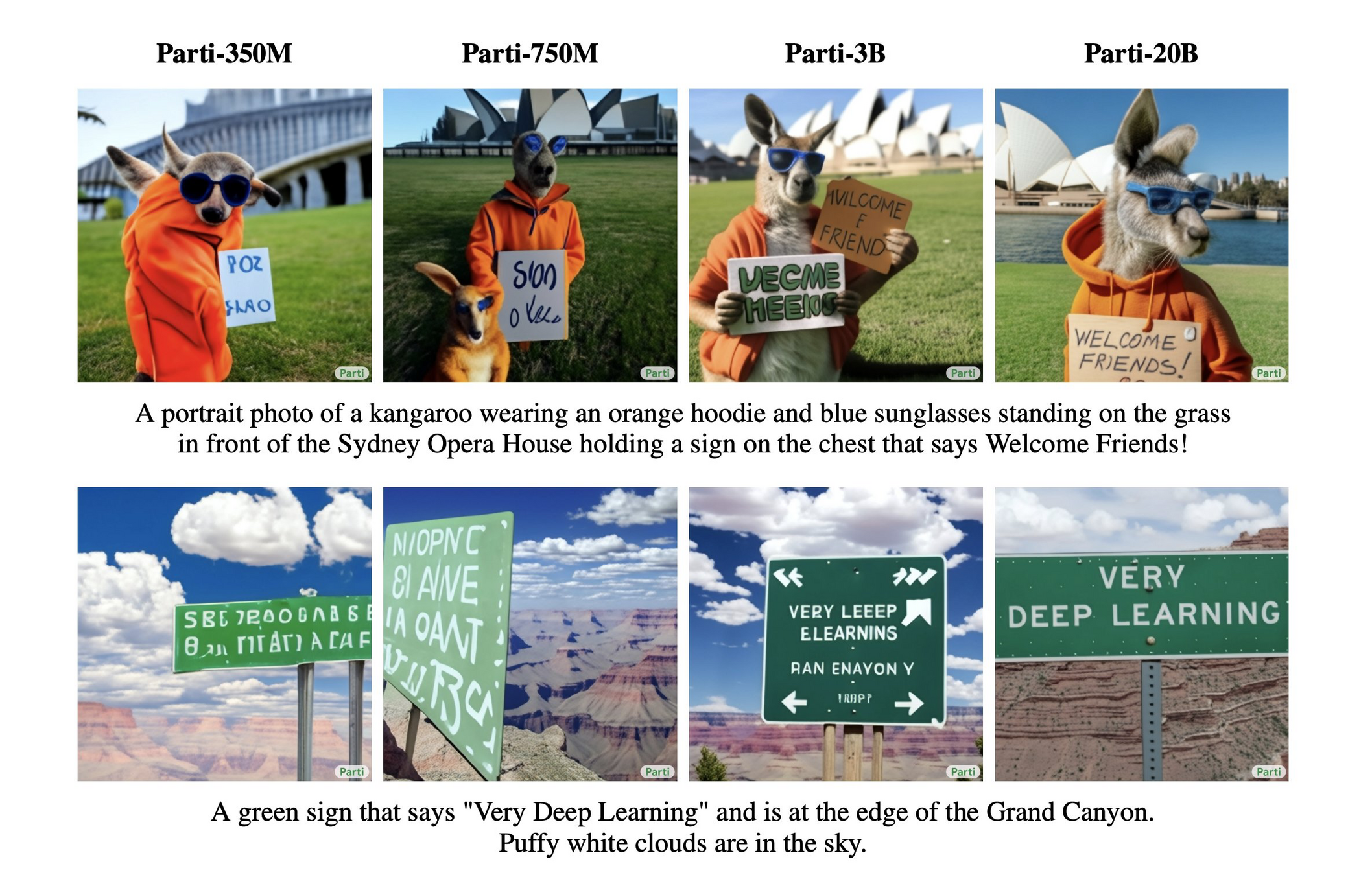

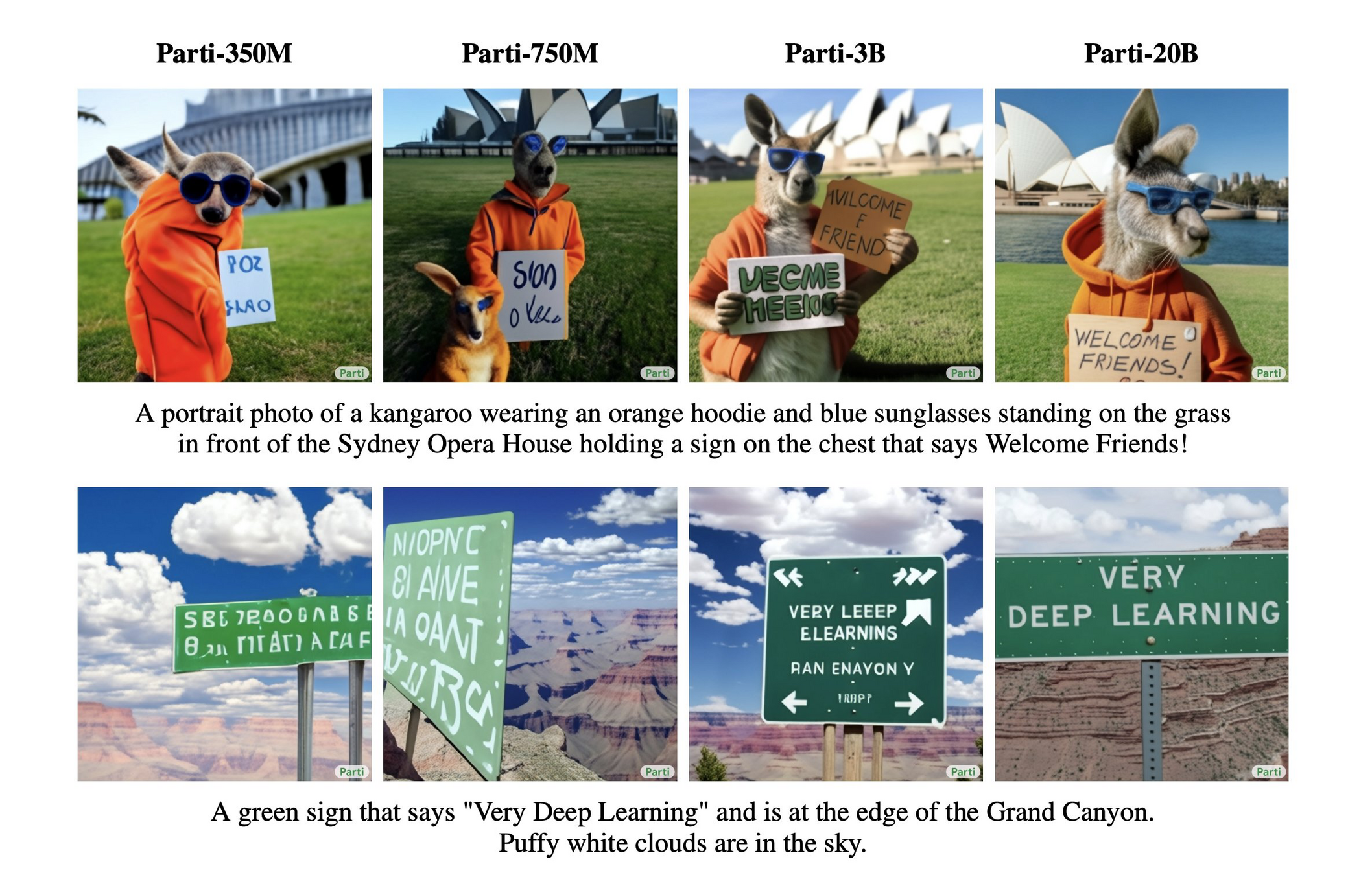

https://parti.research.google

A portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says Welcome Friends!


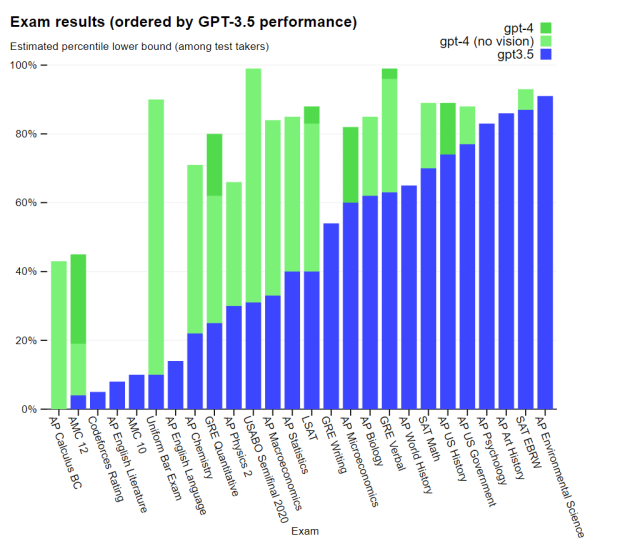

BEFORE

Artificial General Intelligence?

AFTER


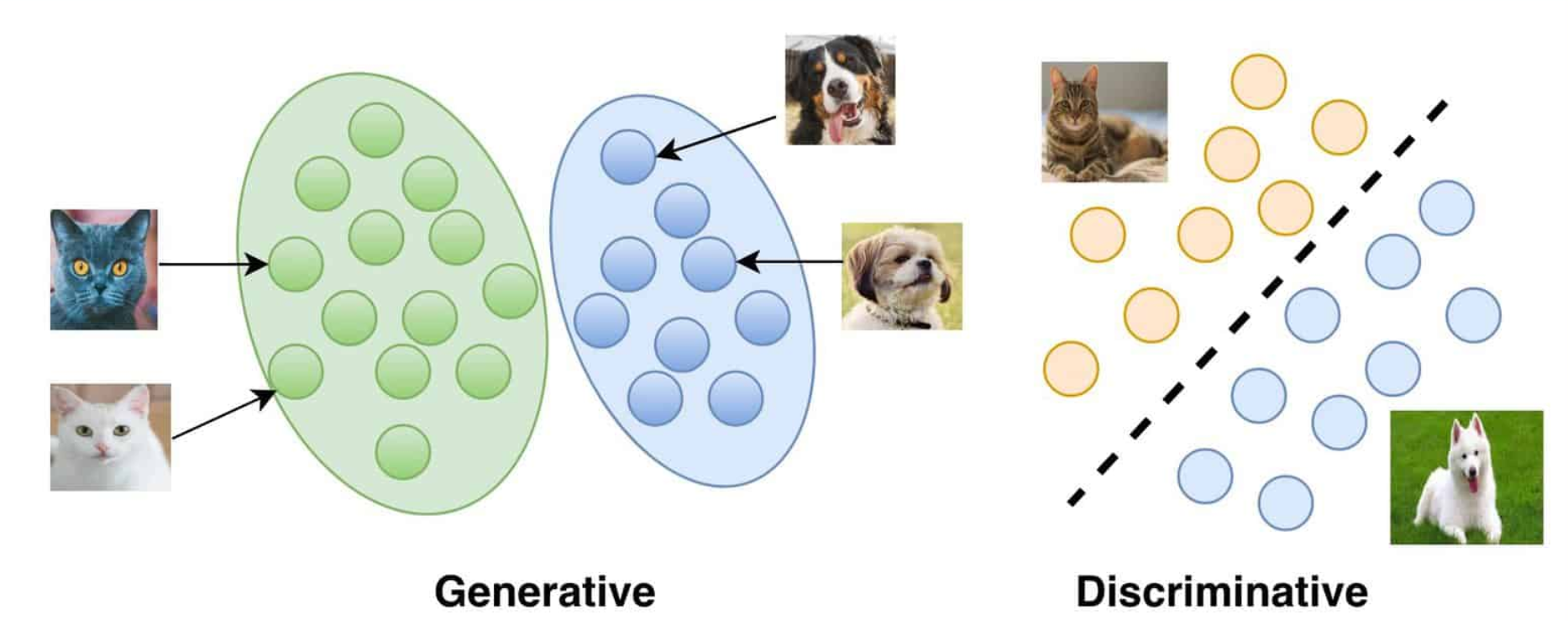

Generation vs Discrimination


Data

A PDF that we can optimize

Maximize the likelihood of the data

Generative Models


Generative Models 101

Maximize the likelihood of the training samples

Parametric Model

Training Samples


Trained Model

Evaluate probabilities

Low Probability

High Probability

Generate Novel Samples
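As a minimal sketch of "maximize the likelihood of the training samples", the JAX snippet below fits the simplest possible parametric model, a 1D Gaussian with a learnable mean and log standard deviation, by gradient descent on the negative log-likelihood. The toy data, learning rate and step count are assumptions chosen for illustration. The trained model can then evaluate probabilities (high near the data, low far from it) and generate novel samples.

```python
import jax
import jax.numpy as jnp

# Hypothetical training samples: 1000 draws from N(2.0, 0.5^2)
data = 2.0 + 0.5 * jax.random.normal(jax.random.PRNGKey(0), (1000,))

def log_prob(params, x):
    """Log-density of a Gaussian with parameters (mean, log standard deviation)."""
    mu, log_sigma = params
    return -0.5 * ((x - mu) / jnp.exp(log_sigma)) ** 2 - log_sigma - 0.5 * jnp.log(2 * jnp.pi)

def nll(params, x):
    """Maximizing the likelihood = minimizing the mean negative log-likelihood."""
    return -jnp.mean(log_prob(params, x))

grad_nll = jax.jit(jax.grad(nll))

params = jnp.array([0.0, 0.0])  # initial mean and log standard deviation
for step in range(500):
    params = params - 0.1 * grad_nll(params, data)  # plain gradient descent

mu, sigma = params[0], jnp.exp(params[1])

# Trained model: evaluate probabilities ...
log_p_typical = log_prob(params, 2.0)  # close to the training data
log_p_outlier = log_prob(params, 5.0)  # far from the training data
# ... and generate novel samples
new_samples = mu + sigma * jax.random.normal(jax.random.PRNGKey(1), (5,))
```

For a Gaussian the maximum-likelihood solution is known in closed form (sample mean and standard deviation), which makes this a convenient check that the optimization does what we expect before moving to models where no closed form exists.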

Simulator

Generative Model

Fast emulators

Testing Theories

Generative Model

Simulator

Generative Models: Simulate and Analyze


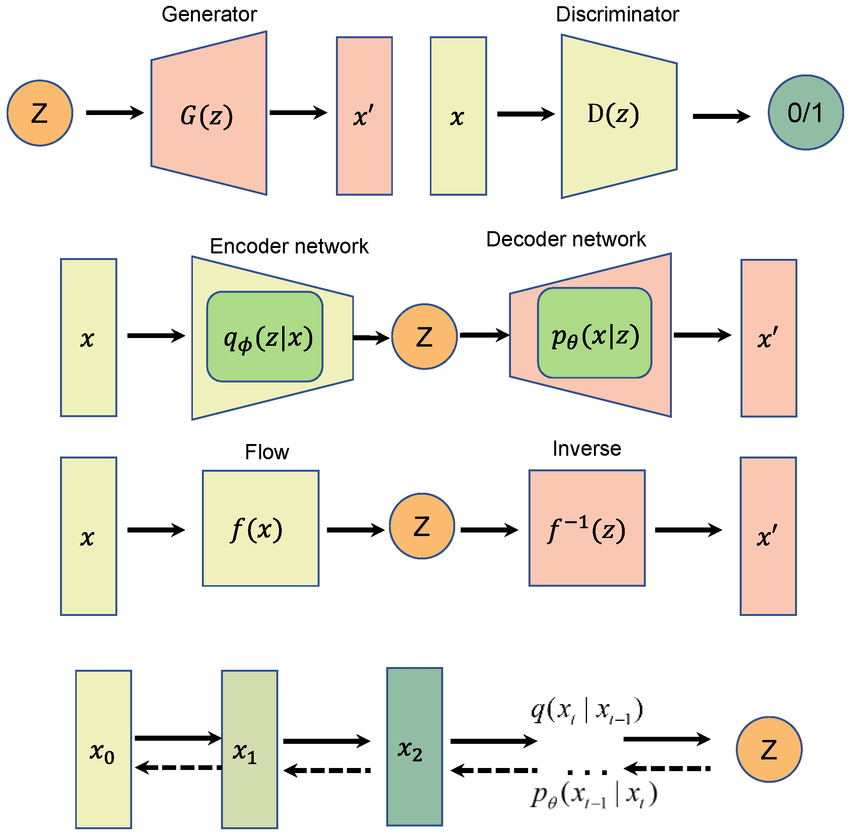

The Generative Zoo


GANs

VAEs

Normalizing Flows

Diffusion Models

[Image Credit: https://lilianweng.github.io/posts/2018-10-13-flow-models/]

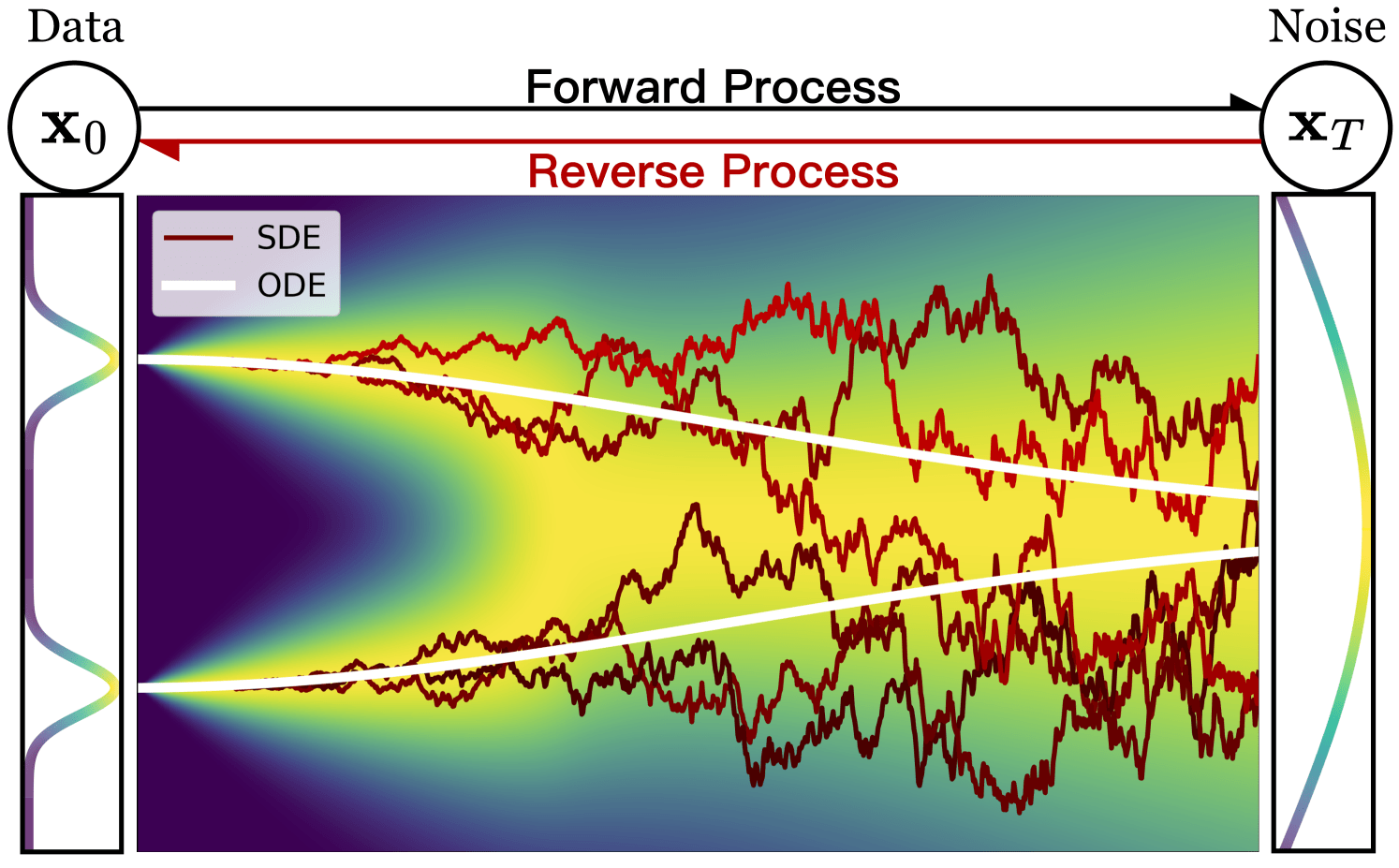

Bridging two distributions

Base

Data

How is the bridge constrained?

Normalizing flows: Reverse = Forward inverse

Diffusion: Forward = Gaussian noising

Flow Matching: Forward = Interpolant

Is p(x0) restricted?

Diffusion: p(x0) is Gaussian

Normalizing flows: p(x0) can be evaluated

Is the bridge stochastic (SDE) or deterministic (ODE)?

Diffusion: Stochastic (SDE)

Normalizing flows: Deterministic (ODE)

(Exact likelihood evaluation)

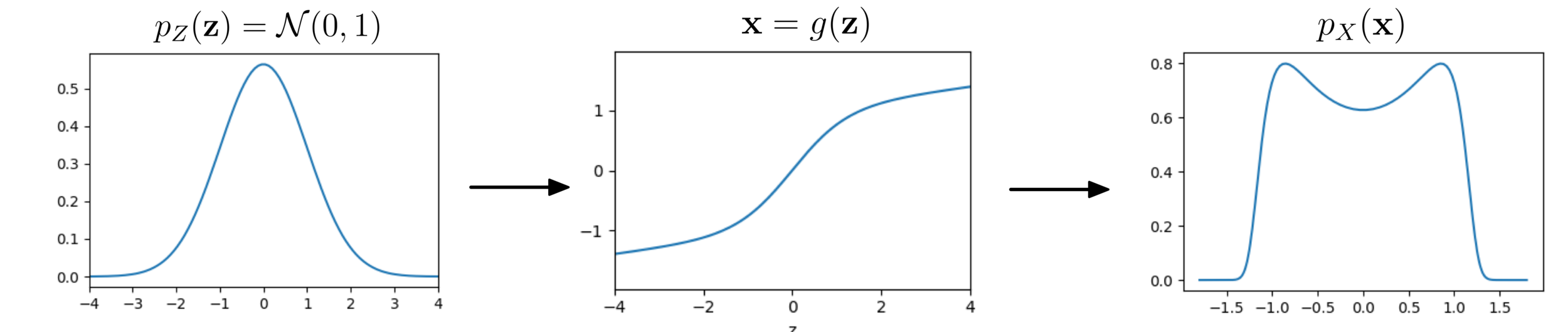

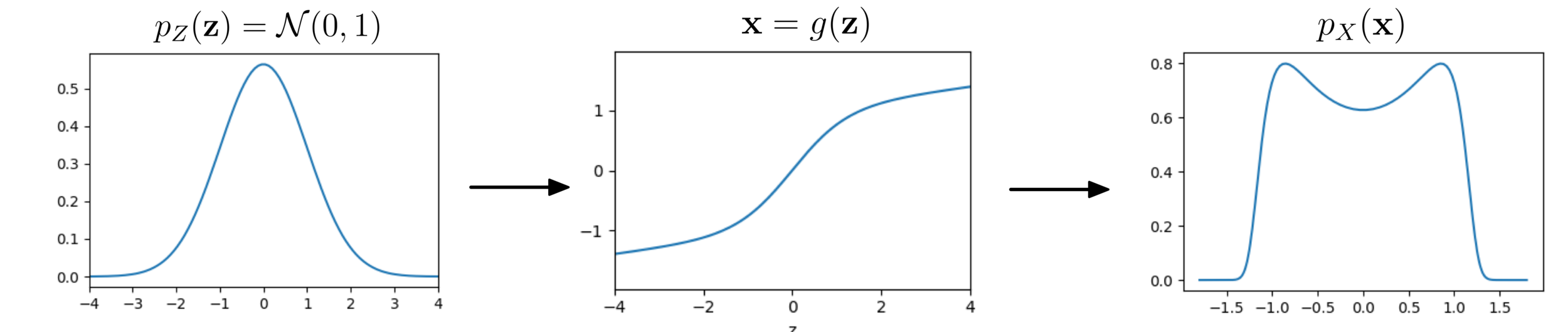

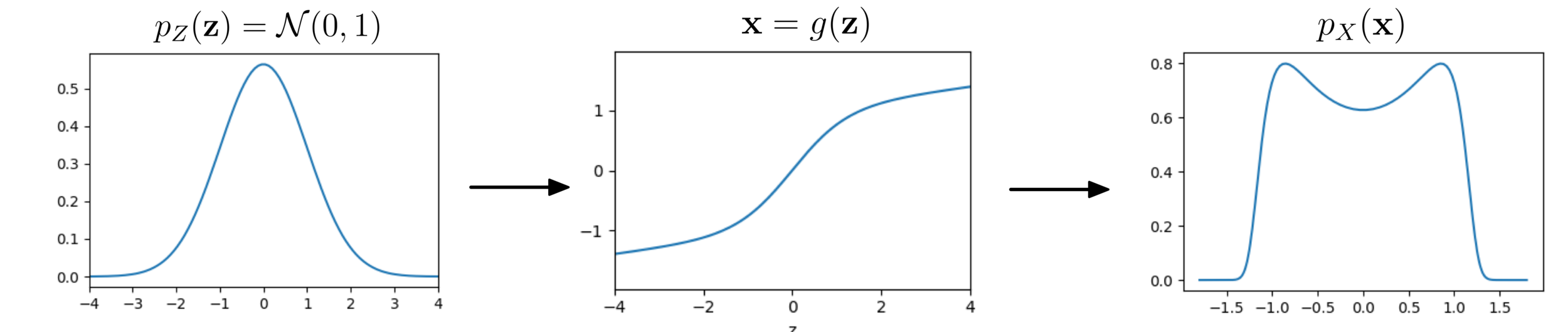

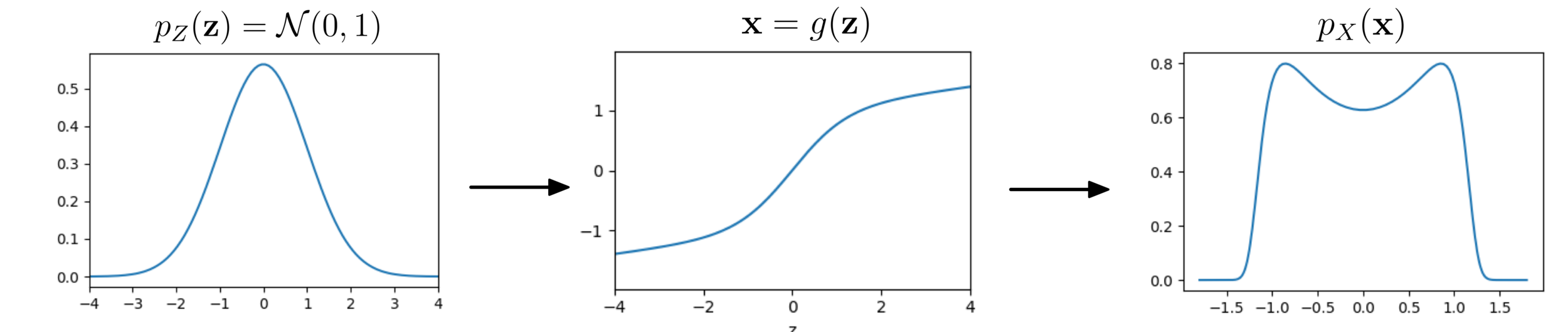

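Change of variables

Let z be sampled from a Gaussian distribution with mean 0 and variance 1, and let x = f(z). How is x distributed?

$$p_X(x) = p_Z\left(f^{-1}(x)\right) \left| \frac{d f^{-1}(x)}{d x} \right|$$

Base distribution: p_Z(z)

Target distribution: p_X(x)

Invertible transformation: f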

Normalizing flows
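A minimal sketch of the change of variables in JAX, assuming for illustration the invertible transformation x = f(z) = exp(z) with z ~ N(0, 1). The names `f`, `f_inv` and `log_prob_x` are hypothetical. The density of x is the base density at f⁻¹(x) times |df⁻¹/dx|, here obtained with `jax.grad`. The check at the end shows that probability mass is conserved: the mass of an interval under p_X equals the mass of the mapped interval under the base, and matches the fraction of pushed-forward samples.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm

def f(z):
    """Assumed invertible transformation for illustration: x = exp(z)."""
    return jnp.exp(z)

def f_inv(x):
    return jnp.log(x)

def log_prob_x(x):
    """log p_X(x) = log p_Z(f^{-1}(x)) + log |d f^{-1}(x) / dx|, for a batch of scalars x."""
    dz_dx = jax.vmap(jax.grad(f_inv))(x)
    return norm.logpdf(f_inv(x)) + jnp.log(jnp.abs(dz_dx))

# Samples from the target: push base samples z ~ N(0, 1) through f
z = jax.random.normal(jax.random.PRNGKey(0), (100_000,))
x_samples = f(z)

# Probability mass of [0.5, 2] under p_X, integrating the density with the trapezoid rule ...
xs = jnp.linspace(0.5, 2.0, 2001)
density = jnp.exp(log_prob_x(xs))
dx = xs[1] - xs[0]
mass_from_density = dx * (jnp.sum(density) - 0.5 * (density[0] + density[-1]))
# ... equals the base mass of [log 0.5, log 2] (mass is conserved by the bijection) ...
mass_from_base = norm.cdf(jnp.log(2.0)) - norm.cdf(jnp.log(0.5))
# ... and the fraction of pushed-forward samples that land in [0.5, 2]
mass_from_samples = jnp.mean((x_samples > 0.5) & (x_samples < 2.0))
```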


Box-Muller transform

Normalizing flows in 1958
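The Box-Muller transform is an invertible map from two independent uniforms (u1, u2) on (0, 1) to two independent standard normals: z0 = sqrt(-2 ln u1) cos(2π u2), z1 = sqrt(-2 ln u1) sin(2π u2). A minimal JAX sketch (the function name `box_muller` and the small `minval` guard against log(0) are our own choices):

```python
import jax
import jax.numpy as jnp

def box_muller(key, n):
    """Map pairs of uniforms (u1, u2) on (0, 1) to pairs of independent N(0, 1) samples."""
    k1, k2 = jax.random.split(key)
    # minval > 0 keeps log(u1) finite
    u1 = jax.random.uniform(k1, (n,), minval=1e-7, maxval=1.0)
    u2 = jax.random.uniform(k2, (n,))
    r = jnp.sqrt(-2.0 * jnp.log(u1))  # radius
    theta = 2.0 * jnp.pi * u2         # angle
    return r * jnp.cos(theta), r * jnp.sin(theta)

z0, z1 = box_muller(jax.random.PRNGKey(0), 100_000)
# z0 and z1 should each have mean close to 0 and standard deviation close to 1
```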


Normalizing flows

[Image Credit: "Understanding Deep Learning" Simon J.D. Prince]

Bijective

Sample

Evaluate probabilities

Probability mass conserved locally


[Image Credit: "Understanding Deep Learning" Simon J.D. Prince]


Invertible functions aren't that common!

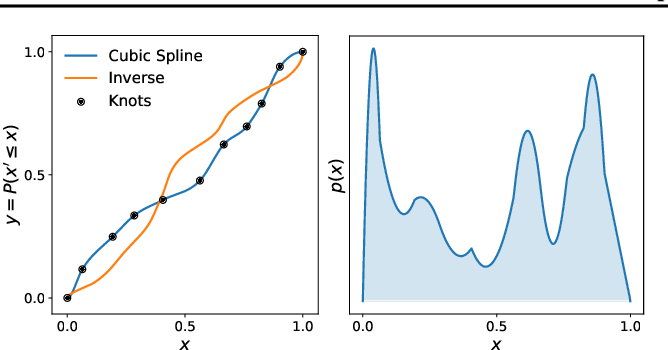

Splines


Issues with NFs: lack of flexibility, because every layer needs

- Invertible functions

- Tractable Jacobians

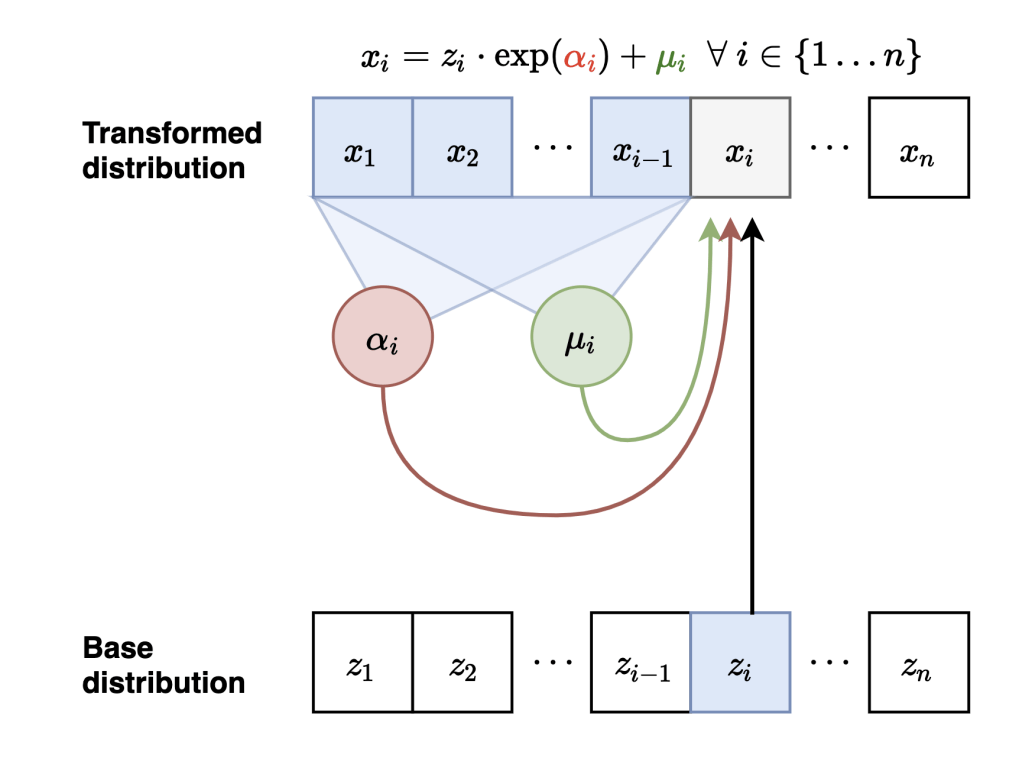

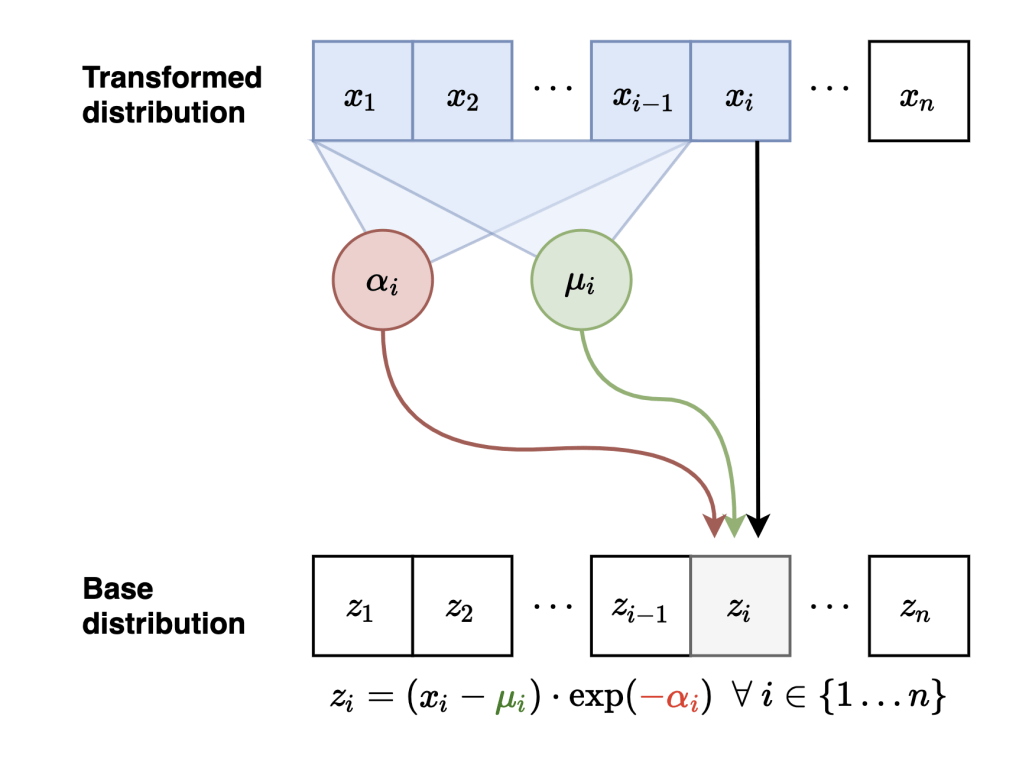

Masked Autoregressive Flows

Neural Network

Sample

Evaluate probabilities
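A minimal sketch of a masked autoregressive affine transform in JAX, following the usual MAF form x_i = μ_i(x_{<i}) + exp(α_i(x_{<i})) u_i with u ~ N(0, I). As an assumption for brevity, the neural network is replaced by a hypothetical linear conditioner whose weights are masked to be strictly lower triangular, so output i only sees x_1 … x_{i-1}. Sampling is sequential (one dimension at a time), while evaluating probabilities needs a single conditioner pass, because the Jacobian is triangular and its log-determinant is just −Σ α_i.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm

D = 3
k_mu, k_alpha, k_u = jax.random.split(jax.random.PRNGKey(0), 3)
# Strictly lower-triangular mask: output i depends only on inputs x_1..x_{i-1}
mask = jnp.tril(jnp.ones((D, D)), k=-1)
W_mu = mask * jax.random.normal(k_mu, (D, D))
W_alpha = mask * 0.5 * jax.random.normal(k_alpha, (D, D))

def conditioner(x):
    """Hypothetical stand-in for the masked neural network: returns (mu, alpha)."""
    mu = x @ W_mu.T
    alpha = jnp.tanh(x @ W_alpha.T)
    return mu, alpha

def sample(u):
    """Sequential: x_i = mu_i(x_{<i}) + exp(alpha_i(x_{<i})) * u_i, one dimension at a time."""
    x = jnp.zeros_like(u)
    for i in range(D):
        mu, alpha = conditioner(x)  # entries >= i of x are still zero, but masked out anyway
        x = x.at[..., i].set(mu[..., i] + jnp.exp(alpha[..., i]) * u[..., i])
    return x

def inverse(x):
    """Parallel: recover all u_i from x with a single conditioner pass."""
    mu, alpha = conditioner(x)
    return (x - mu) * jnp.exp(-alpha)

def log_prob(x):
    """log p(x) = sum_i log N(u_i; 0, 1) - sum_i alpha_i  (triangular Jacobian)."""
    mu, alpha = conditioner(x)
    u = (x - mu) * jnp.exp(-alpha)
    return jnp.sum(norm.logpdf(u), axis=-1) - jnp.sum(alpha, axis=-1)

u = jax.random.normal(k_u, (5, D))
x = sample(u)
```

The asymmetry is the design trade-off of MAF: density evaluation (what training needs) is parallel, sampling is sequential in the number of dimensions.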


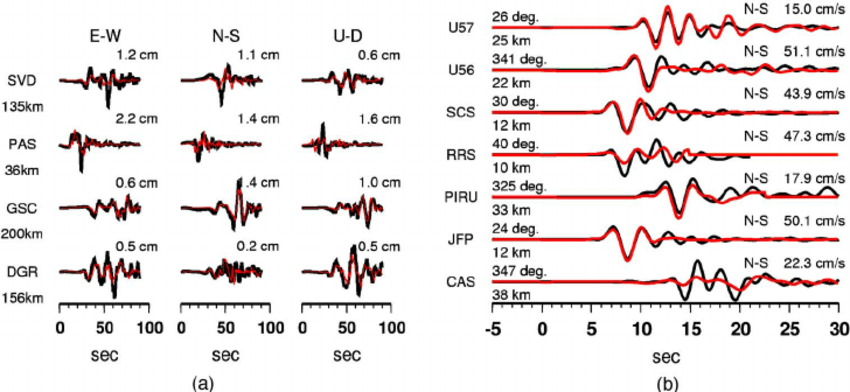

Forward Model

Observable

Dark matter

Dark energy

Inflation

Predict

Infer

Parameters

Inverse mapping

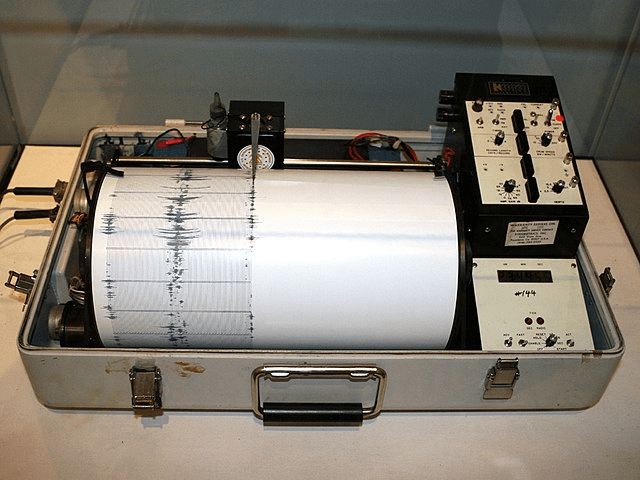

Fault line stress

Plate velocity


Simulation-based Inference

Normalizing flow

In continuous time

Continuity Equation

[Image Credit: "Understanding Deep Learning" Simon J.D. Prince]


Chen et al. (2018), Grathwohl et al. (2018)

Generate


Evaluate Probability


Loss requires solving an ODE!

Diffusion, flow matching, interpolants: all are ways to avoid this ODE solve at training time
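To see why maximum-likelihood training of a continuous-time flow is expensive, here is a minimal JAX sketch of what "Generate" and "Evaluate Probability" involve: Euler-integrating dx/dt = v(x, t) together with the instantaneous change of variables d log p/dt = −div v. The vector field is a hypothetical linear expansion v(x, t) = A x (a trained network would go there), chosen so the answer is known exactly: x(1) = z e^A and log p drops by D·A. The step count and Euler solver are assumptions; every likelihood evaluation, and hence every training step, would need a solve like this.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm

A = 0.5  # hypothetical expansion rate of the toy vector field

def velocity(x, t):
    """Hypothetical vector field v(x, t) = A x; a trained network would go here."""
    return A * x

def divergence(x, t):
    """div v = trace of the Jacobian dv/dx, computed exactly with jax.jacfwd (fine in low dimension)."""
    return jnp.trace(jax.jacfwd(velocity)(x, t))

@jax.jit
def generate_with_log_prob(z):
    """Euler-integrate dx/dt = v(x, t) and d log p/dt = -div v(x, t) from t = 0 to t = 1."""
    n_steps = 1000
    dt = 1.0 / n_steps

    def step(k, state):
        x, log_p = state
        t = k * dt
        log_p = log_p - divergence(x, t) * dt
        x = x + velocity(x, t) * dt
        return x, log_p

    log_p0 = jnp.sum(norm.logpdf(z))  # base density N(0, I)
    return jax.lax.fori_loop(0, n_steps, step, (z, log_p0))

z = jnp.array([0.3, -1.2])
x, log_p = generate_with_log_prob(z)
```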

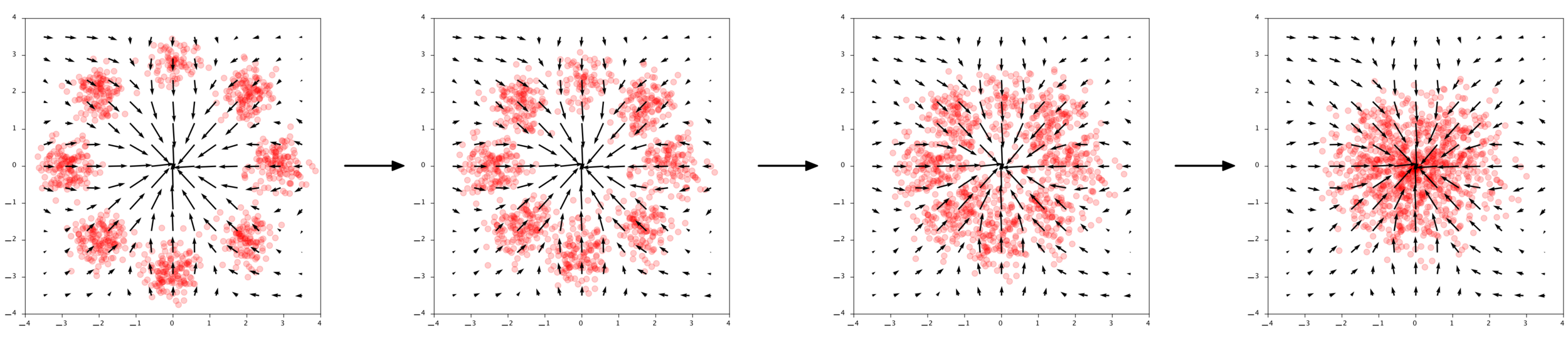

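Conditional Flow matching

Flow matching loss (intractable, because the marginal vector field u_t(x) is unknown):

$$\mathcal{L}_{\rm FM}(\theta) = \mathbb{E}_{t,\, x \sim p_t(x)} \left\| v_\theta(x, t) - u_t(x) \right\|^2$$

Assume a conditional vector field u_t(x | x_1), known at training time. The loss that we can compute:

$$\mathcal{L}_{\rm CFM}(\theta) = \mathbb{E}_{t,\, x_1 \sim q(x_1),\, x \sim p_t(x \mid x_1)} \left\| v_\theta(x, t) - u_t(x \mid x_1) \right\|^2$$

The gradients of the two losses are the same: $\nabla_\theta \mathcal{L}_{\rm FM} = \nabla_\theta \mathcal{L}_{\rm CFM}$

["Flow Matching for Generative Modeling" Lipman et al]

["Stochastic Interpolants: A Unifying Framework for Flows and Diffusions" Albergo et al]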
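A minimal JAX sketch of a conditional flow matching training loop. As assumptions for illustration: the bridge is the linear interpolant x_t = (1 − t) x_0 + t x_1 between a Gaussian base sample x_0 and a data sample x_1, conditioning on both endpoints (a common variant, not necessarily the exact Gaussian-path choice of Lipman et al), so the regression target is u = x_1 − x_0; the small MLP `v_theta`, the 2D toy data and the plain SGD update are hypothetical. No ODE is solved during training: each step only needs samples and a regression loss.

```python
import jax
import jax.numpy as jnp

LEARNING_RATE = 1e-2

def init_params(key, dim=2, hidden=64):
    """Hypothetical small MLP v_theta(x, t): input [x, t], output a velocity with the shape of x."""
    k1, k2, k3 = jax.random.split(key, 3)
    return {
        "W1": jax.random.normal(k1, (dim + 1, hidden)) / jnp.sqrt(dim + 1.0),
        "b1": jnp.zeros(hidden),
        "W2": jax.random.normal(k2, (hidden, hidden)) / jnp.sqrt(float(hidden)),
        "b2": jnp.zeros(hidden),
        "W3": jax.random.normal(k3, (hidden, dim)) / jnp.sqrt(float(hidden)),
        "b3": jnp.zeros(dim),
    }

def v_theta(params, x, t):
    h = jnp.concatenate([x, t[:, None]], axis=-1)
    h = jax.nn.silu(h @ params["W1"] + params["b1"])
    h = jax.nn.silu(h @ params["W2"] + params["b2"])
    return h @ params["W3"] + params["b3"]

def cfm_loss(params, key, x1):
    """Conditional flow matching loss with a linear interpolant from x0 ~ N(0, I) to data x1."""
    k0, kt = jax.random.split(key)
    x0 = jax.random.normal(k0, x1.shape)
    t = jax.random.uniform(kt, (x1.shape[0],))
    xt = (1.0 - t[:, None]) * x0 + t[:, None] * x1  # point on the bridge between x0 and x1
    target = x1 - x0                                # conditional velocity, known at training time
    return jnp.mean(jnp.sum((v_theta(params, xt, t) - target) ** 2, axis=-1))

@jax.jit
def train_step(params, key, x1):
    loss, grads = jax.value_and_grad(cfm_loss)(params, key, x1)
    params = jax.tree_util.tree_map(lambda p, g: p - LEARNING_RATE * g, params, grads)
    return params, loss

# Hypothetical 2D "data": a Gaussian blob centred at (2, -1)
key = jax.random.PRNGKey(0)
key, k_init, k_data = jax.random.split(key, 3)
data = jnp.array([2.0, -1.0]) + 0.3 * jax.random.normal(k_data, (4096, 2))
params = init_params(k_init)

losses = []
for step in range(300):
    key, k_batch, k_loss = jax.random.split(key, 3)
    batch = data[jax.random.choice(k_batch, data.shape[0], (256,))]
    params, loss = train_step(params, k_loss, batch)
    losses.append(float(loss))
```

The loss does not go to zero even for a perfect model: many (x_0, x_1) pairs pass through the same x_t, and the network can only learn their average velocity, which is exactly the marginal field u_t(x).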


Flow Matching

Continuity equation

[Image Credit: "Understanding Deep Learning" Simon J.D. Prince]

Sample

Evaluate probabilities
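Sampling from a flow matching model means integrating dx/dt = v(x, t) from the base at t = 0 to t = 1. A minimal JAX sketch, assuming a 1D toy problem (base N(0, 1), data N(M, S²), independently paired, linear interpolant) where the marginal field u_t(x) = E[x_1 − x_0 | x_t = x] has a closed form; in practice a trained network v_θ takes its place. `marginal_velocity`, `M`, `S` and the Euler step count are our own illustrative choices. Evaluating probabilities would additionally integrate −div v along the path, as in continuous-time normalizing flows.

```python
import jax
import jax.numpy as jnp

# Assumed toy problem: base N(0, 1), data N(M, S^2), linear interpolant x_t = (1 - t) x0 + t x1
M, S = 3.0, 0.5

def marginal_velocity(x, t):
    """Exact marginal field u_t(x) = E[x1 - x0 | x_t = x] for this Gaussian case."""
    var_t = (1.0 - t) ** 2 + (t * S) ** 2  # Var(x_t)
    cov = t * S**2 - (1.0 - t)             # Cov(x1 - x0, x_t)
    return M + cov / var_t * (x - t * M)

def sample(key, n, n_steps=1000):
    """Generate: draw x0 from the base and Euler-integrate dx/dt = u_t(x) from t = 0 to t = 1."""
    dt = 1.0 / n_steps
    x0 = jax.random.normal(key, (n,))

    def step(k, x):
        return x + marginal_velocity(x, k * dt) * dt

    return jax.lax.fori_loop(0, n_steps, step, x0)

samples = sample(jax.random.PRNGKey(0), 20_000)
# samples should be approximately distributed as N(M, S^2)
```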


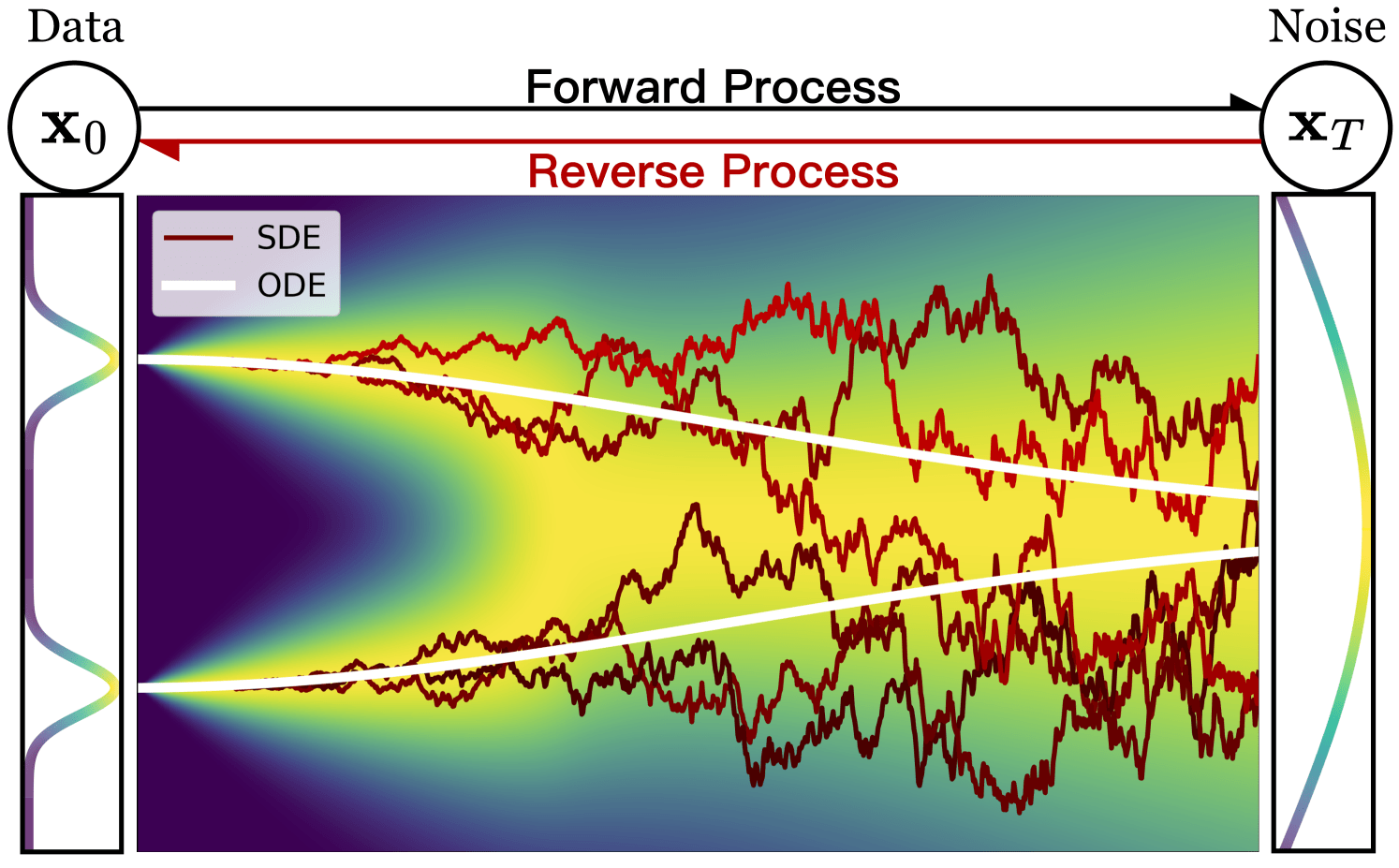

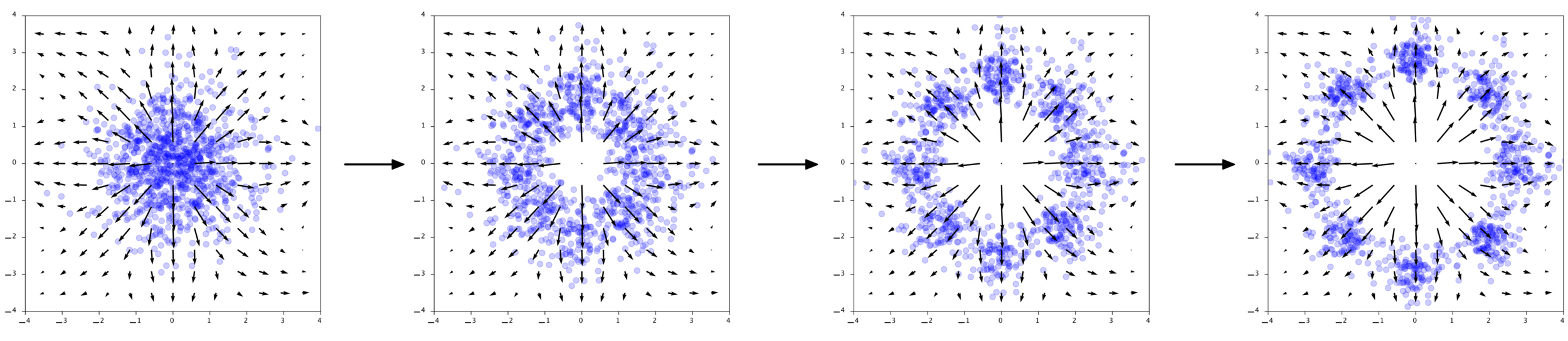

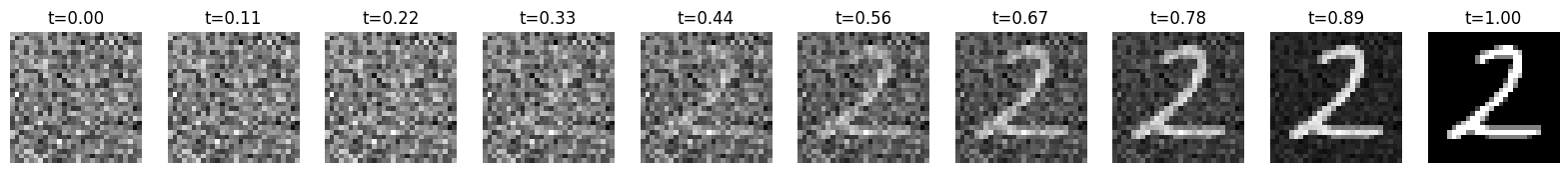

Diffusion Models

Reverse diffusion: Denoise previous step

Forward diffusion: Add Gaussian noise (fixed)

Prompt

A person half Yoda half Gandalf

Denoising = Regression

Fixed base distribution:

Gaussian
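A minimal JAX sketch of the two diffusion ingredients: the fixed forward process that adds Gaussian noise, and the denoising objective, which is plain regression. As assumptions, it uses a DDPM-style linear β schedule with T = 1000 steps, the closed form q(x_t | x_0) = N(√ᾱ_t x_0, (1 − ᾱ_t) I), and a network that predicts the added noise ε; `eps_model` is a hypothetical callable. At the last step ᾱ_T is tiny, so x_T is essentially the fixed Gaussian base distribution, whatever the data.

```python
import jax
import jax.numpy as jnp

# Assumed DDPM-style linear noise schedule
T = 1000
betas = jnp.linspace(1e-4, 0.02, T)
alphas_bar = jnp.cumprod(1.0 - betas)  # abar_t = prod_{s<=t} (1 - beta_s)

def forward_diffuse(key, x0, t):
    """Sample q(x_t | x_0) = N(sqrt(abar_t) x_0, (1 - abar_t) I) in one shot, for a batch of times t."""
    eps = jax.random.normal(key, x0.shape)
    ab = alphas_bar[t][:, None]
    xt = jnp.sqrt(ab) * x0 + jnp.sqrt(1.0 - ab) * eps
    return xt, eps

def denoising_loss(eps_model, params, key, x0):
    """Denoising = regression: predict the added noise eps from (x_t, t) with a mean squared error."""
    k_t, k_eps = jax.random.split(key)
    t = jax.random.randint(k_t, (x0.shape[0],), 0, T)
    xt, eps = forward_diffuse(k_eps, x0, t)
    return jnp.mean((eps_model(params, xt, t) - eps) ** 2)

def zero_model(params, xt, t):
    """Hypothetical placeholder network that always predicts zero noise (loss should be about 1)."""
    return jnp.zeros_like(xt)
```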


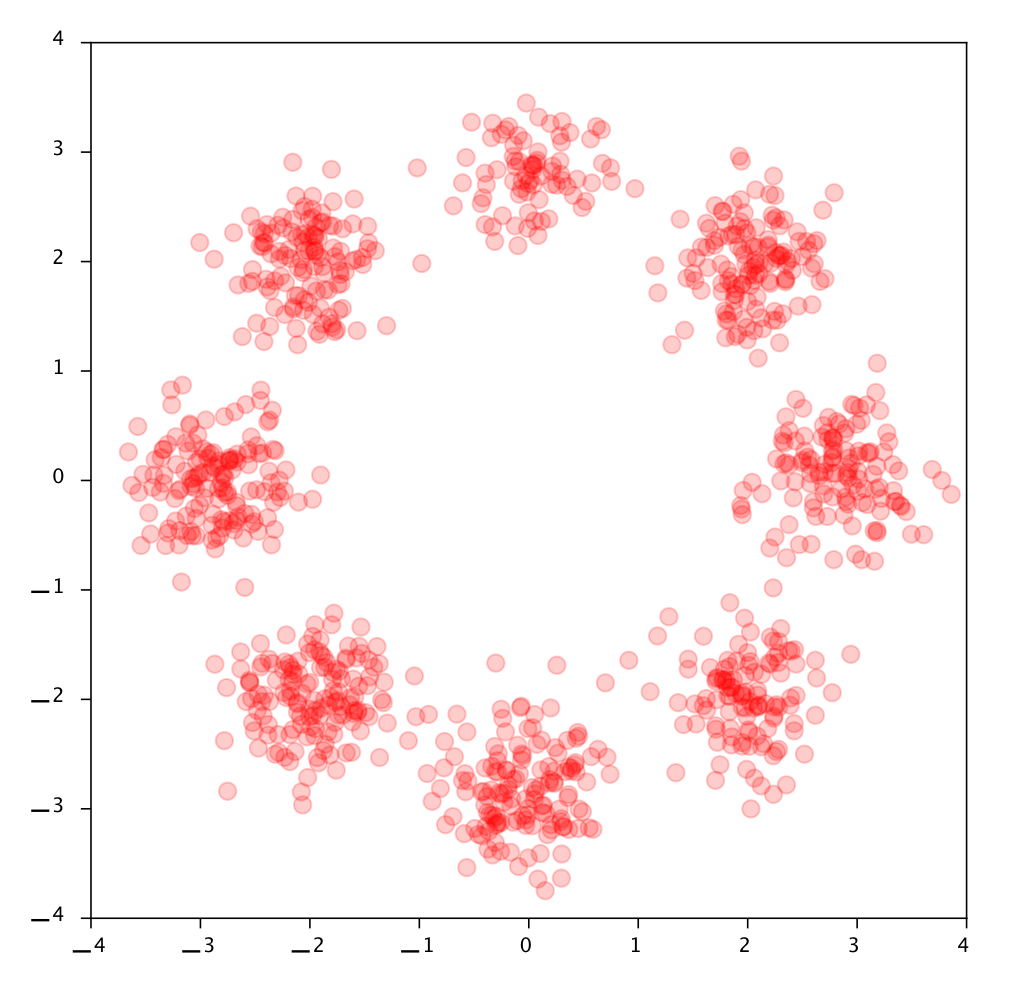

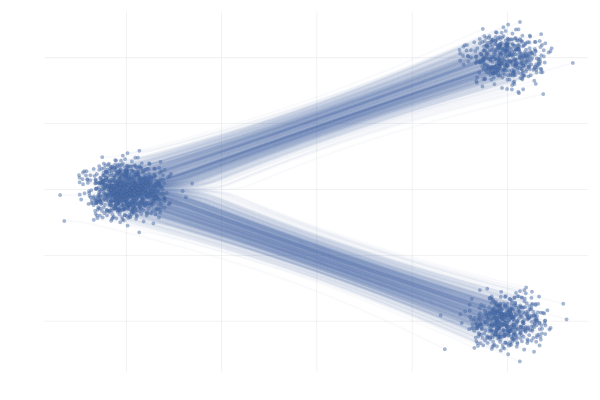

["A point cloud approach to generative modeling for galaxy surveys at the field level" Cuesta-Lazaro and Mishra-Sharma, International Conference on Machine Learning ICML AI4Astro 2023, Spotlight talk, arXiv:2311.17141]

Base Distribution

Target Distribution

Simulated Galaxy 3D Map

Prompt: A person half Yoda half Gandalf


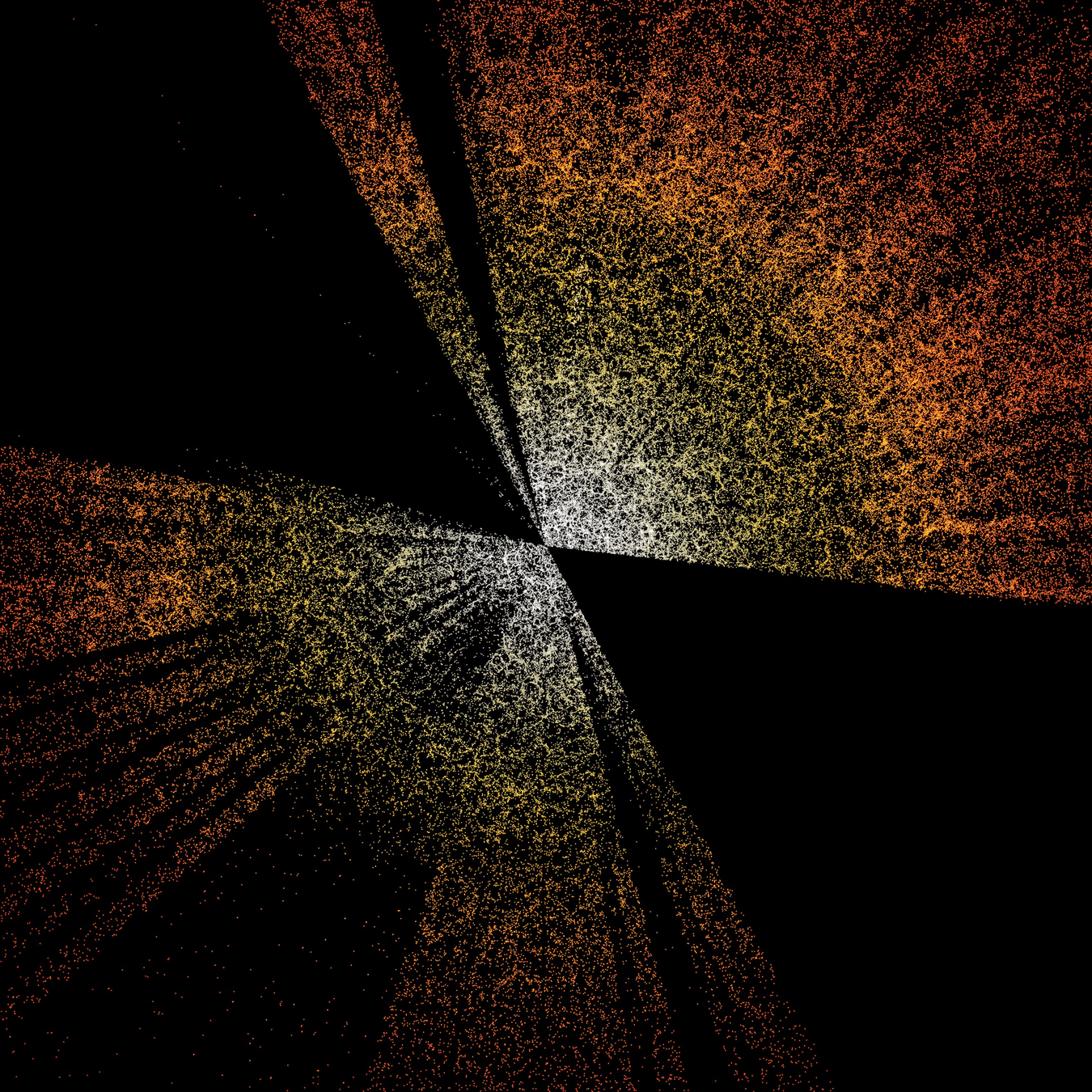

Autoregressive in Frequency

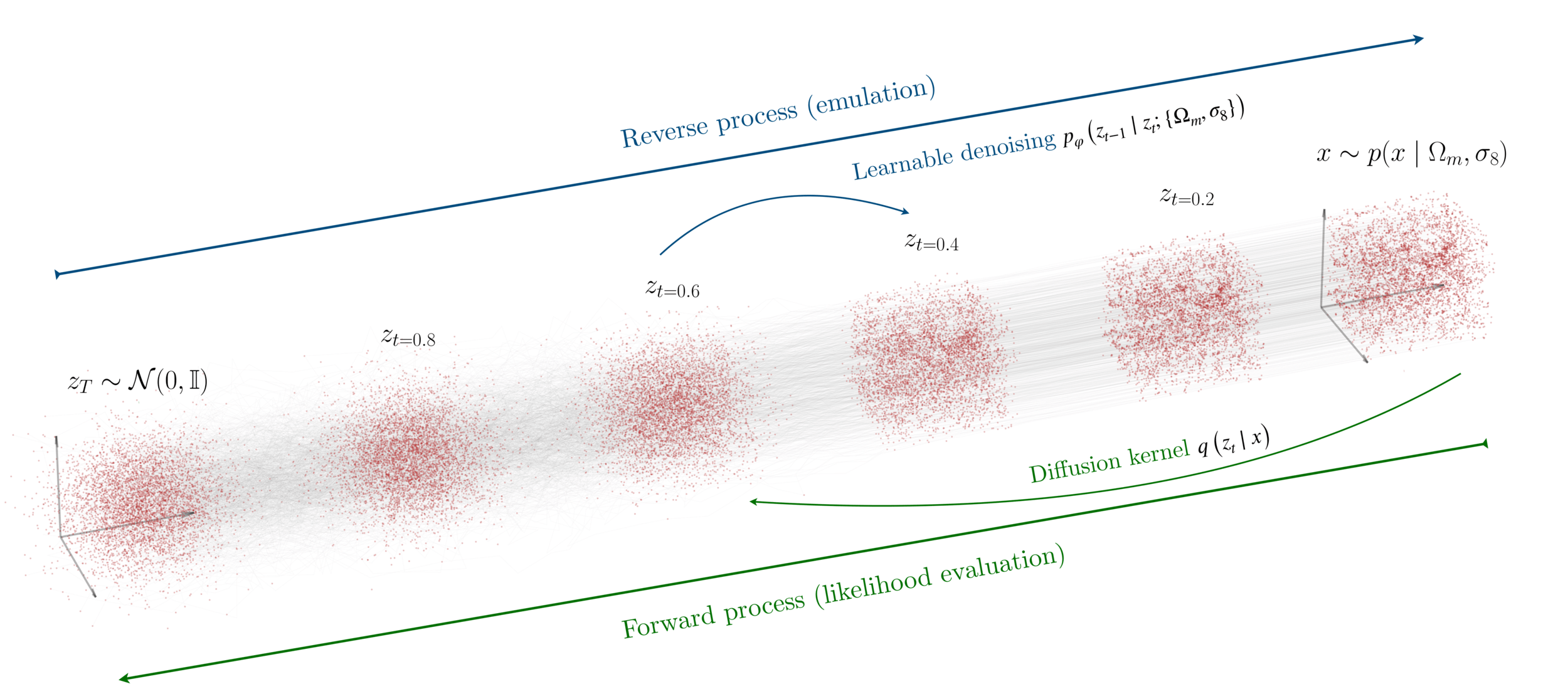

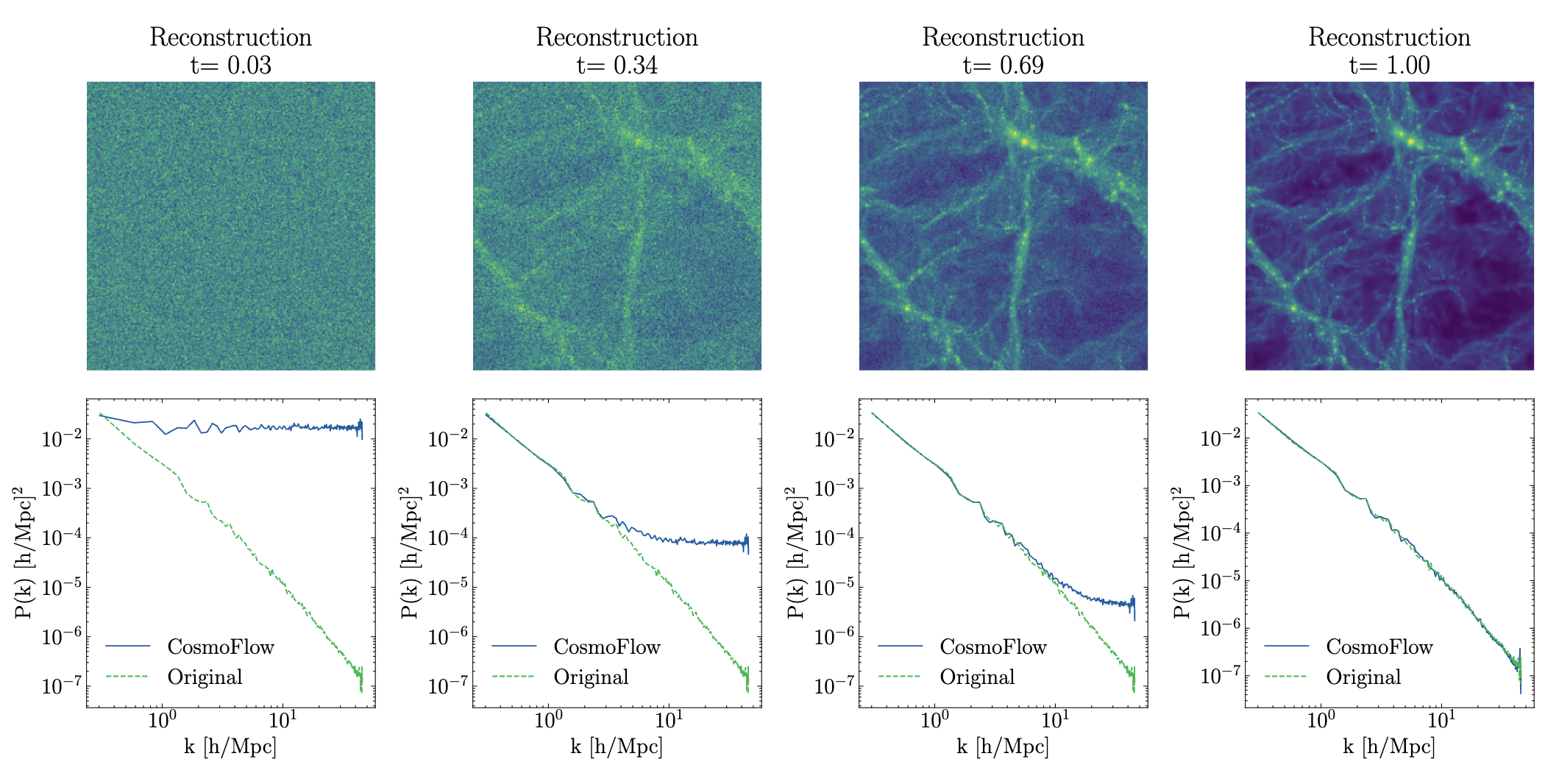

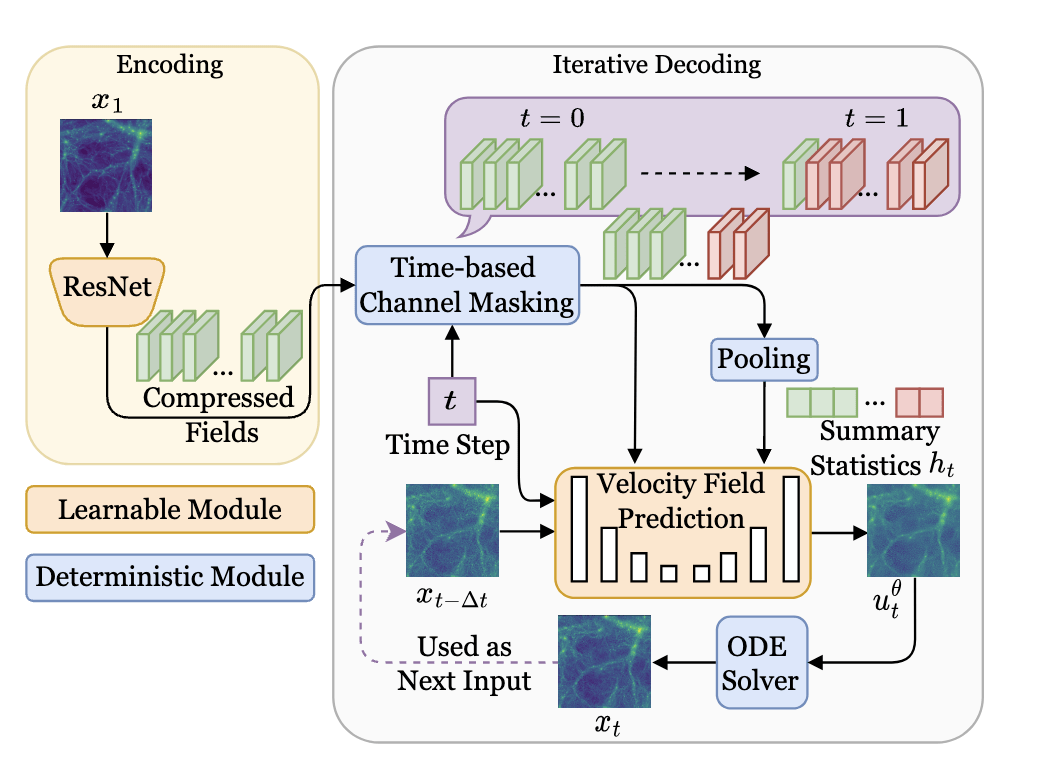

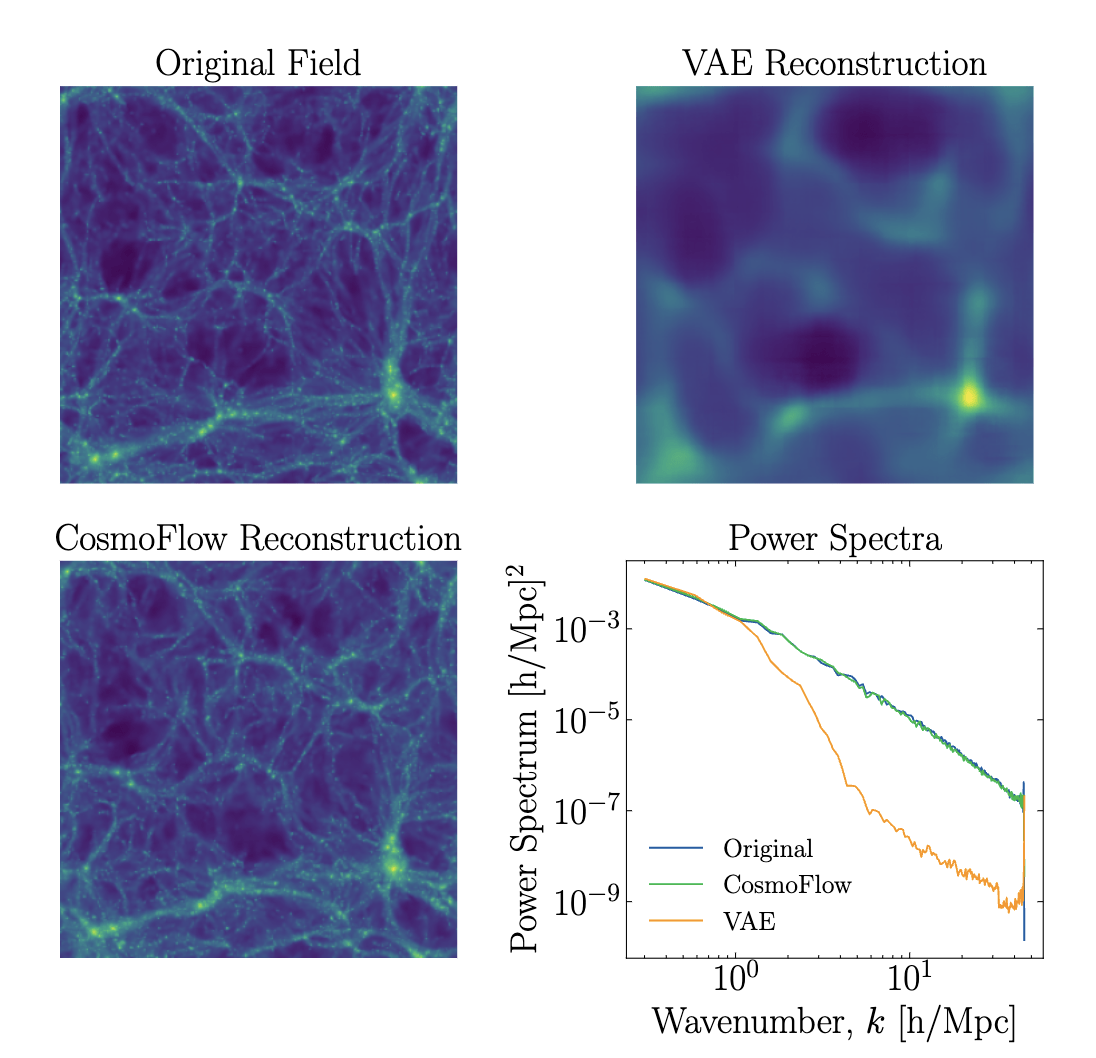

["CosmoFlow: Scale-Aware Representation Learning for Cosmology with Flow Matching" Kannan, Qiu, Cuesta-Lazaro, Jeong (in prep)]


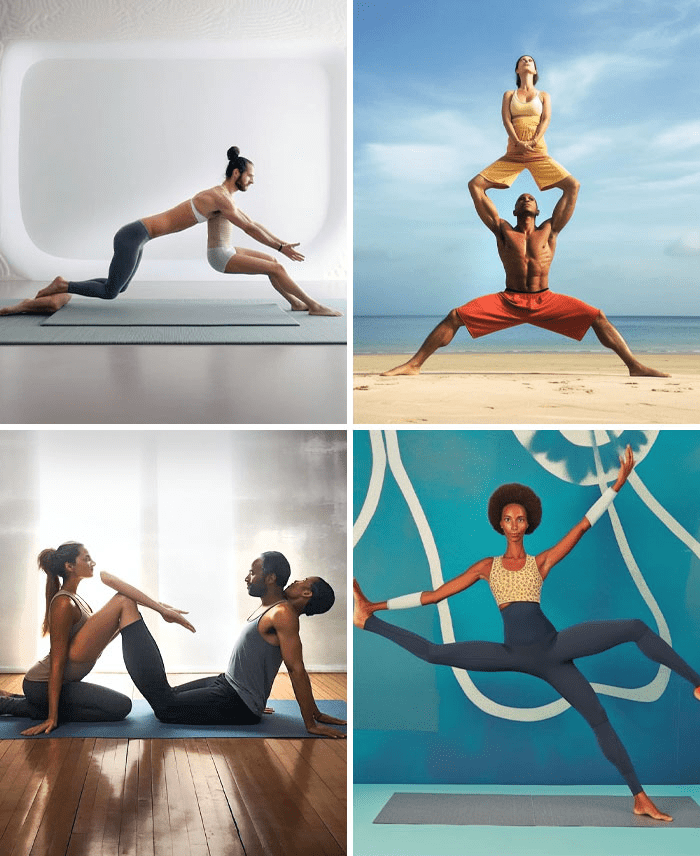

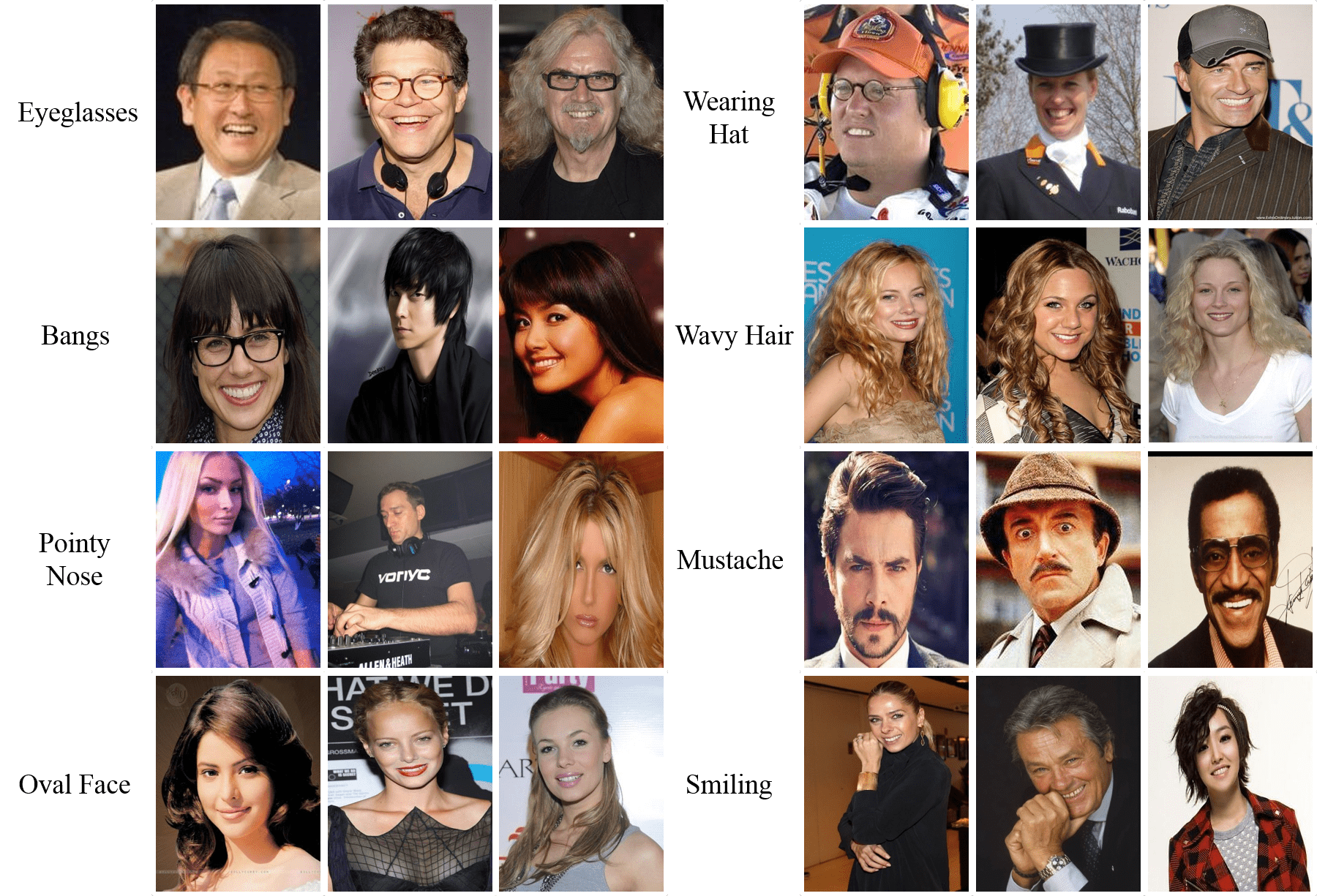

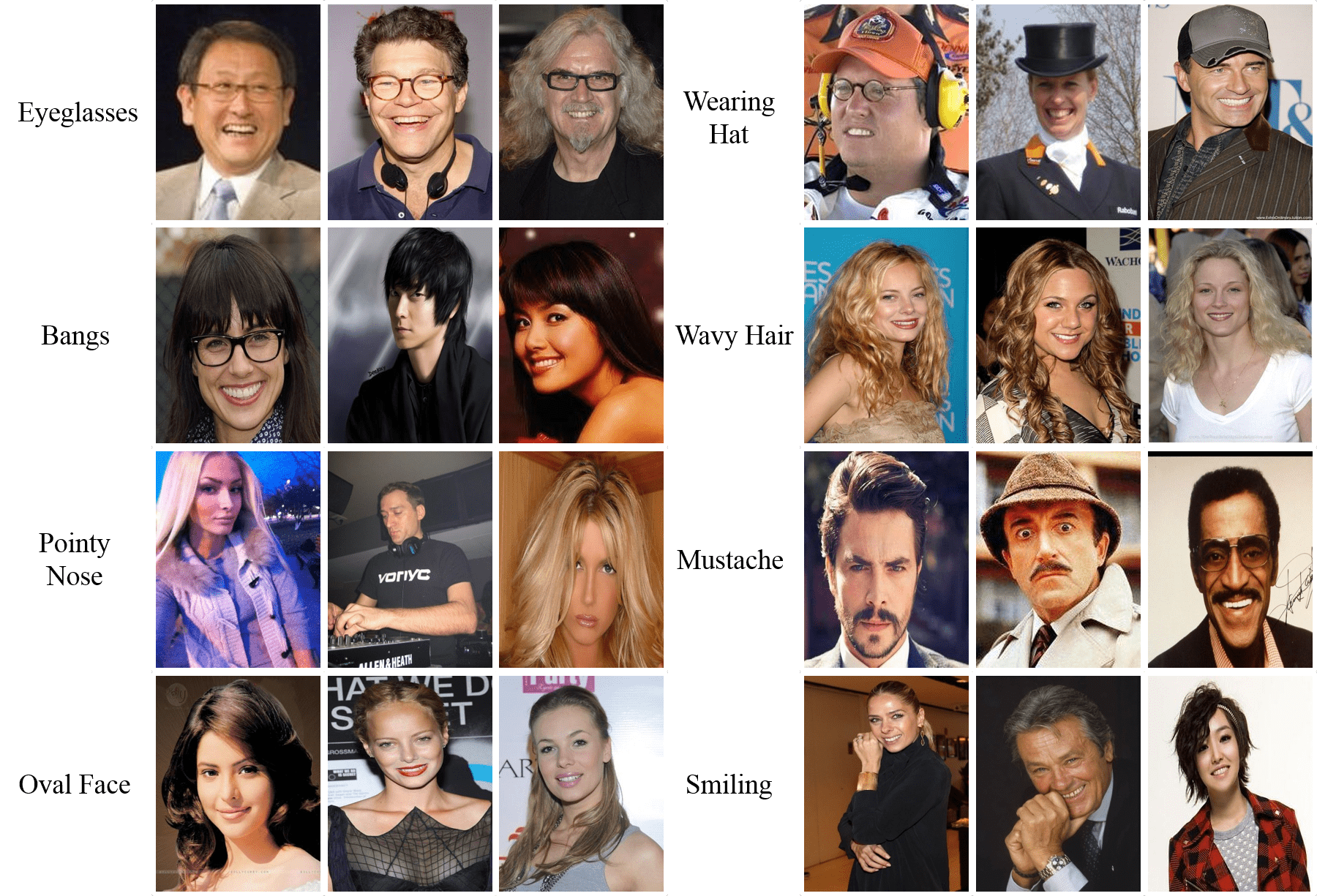

Real or Fake?

How good is my generative model?


["A Practical Guide to Sample-based Statistical Distances for Evaluating Generative Models in Science" Bischoff et al 2024, arXiv:2403.12636]
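One widely used sample-based distance is the maximum mean discrepancy (MMD), which compares two sets of samples through a kernel and is close to zero when they come from the same distribution. A minimal JAX sketch of the unbiased squared-MMD estimator with a Gaussian (RBF) kernel; the bandwidth of 1 and the toy samples standing in for "real" and "generated" data are assumptions, and in practice the bandwidth choice matters.

```python
import jax
import jax.numpy as jnp

def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian kernel matrix k(x_i, y_j) = exp(-|x_i - y_j|^2 / (2 bandwidth^2))."""
    sq_dists = jnp.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return jnp.exp(-sq_dists / (2.0 * bandwidth**2))

def mmd2(x, y, bandwidth=1.0):
    """Unbiased estimate of the squared MMD between samples x (n, d) and y (m, d)."""
    n, m = x.shape[0], y.shape[0]
    kxx = rbf_kernel(x, x, bandwidth)
    kyy = rbf_kernel(y, y, bandwidth)
    kxy = rbf_kernel(x, y, bandwidth)
    # Drop the diagonal (i == j) terms for the within-sample averages
    term_xx = (jnp.sum(kxx) - jnp.trace(kxx)) / (n * (n - 1))
    term_yy = (jnp.sum(kyy) - jnp.trace(kyy)) / (m * (m - 1))
    return term_xx + term_yy - 2.0 * jnp.mean(kxy)

# Hypothetical "real" samples and two candidate "generated" sample sets
k1, k2, k3 = jax.random.split(jax.random.PRNGKey(0), 3)
real = jax.random.normal(k1, (500, 2))
good_model = jax.random.normal(k2, (500, 2))                           # same distribution
biased_model = jax.random.normal(k3, (500, 2)) + jnp.array([1.0, 0.0])  # shifted mean
```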

Panels (varying cosmological parameters): mean relative velocity vs pair separation, k nearest neighbours, pair separation

Physics as a testing ground: Well-understood summary statistics enable rigorous validation of generative models


Reproducing summary statistics

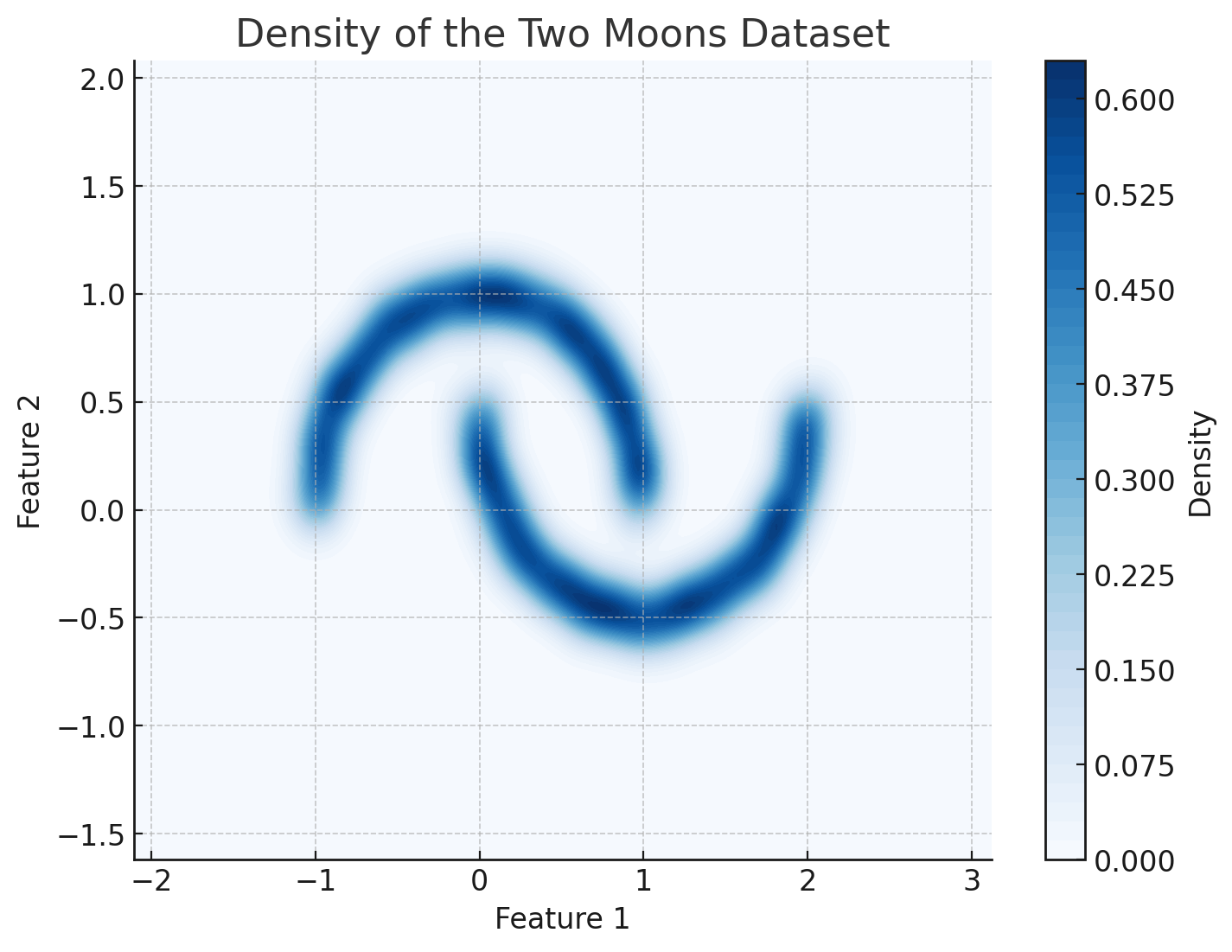

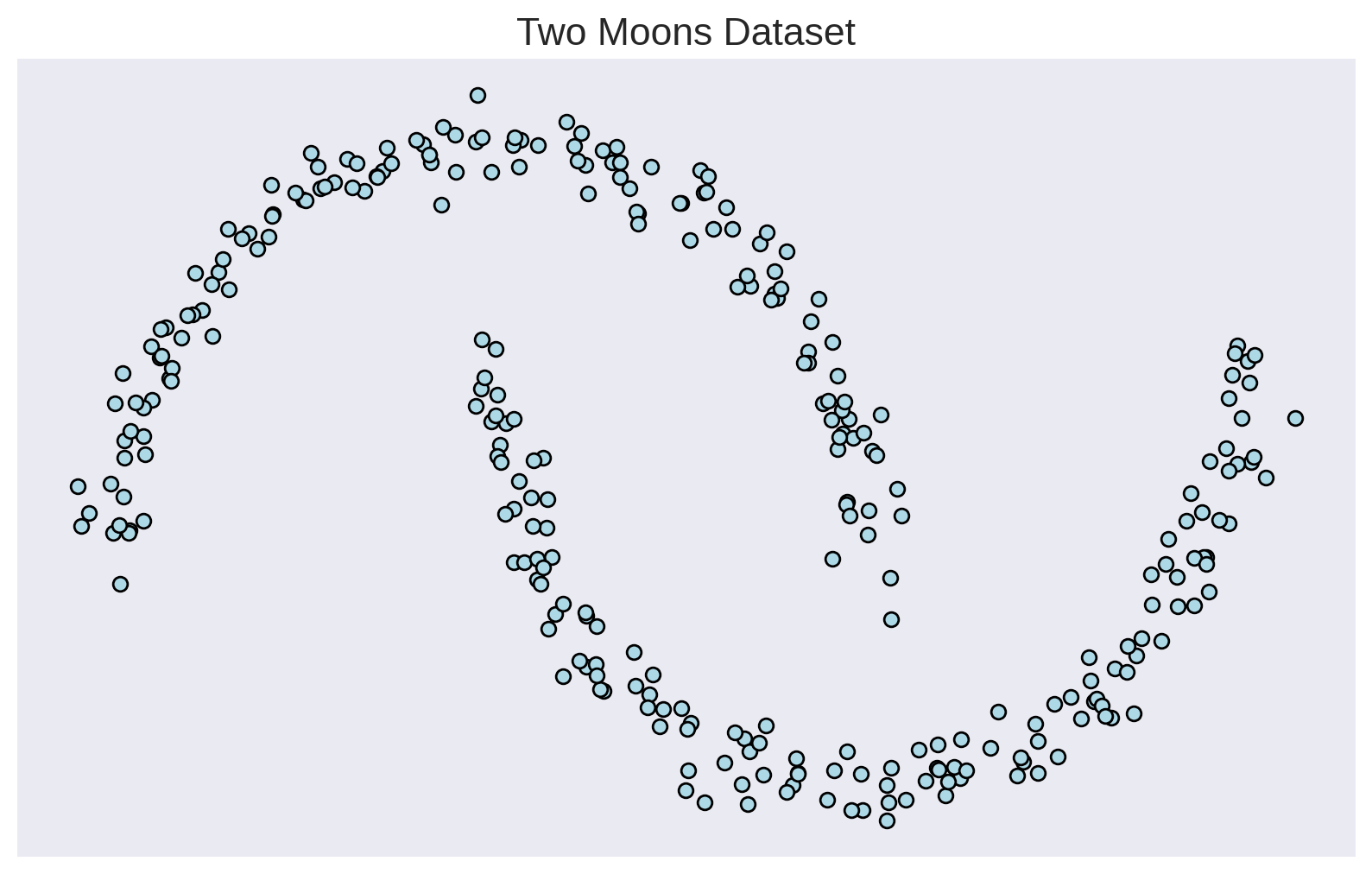

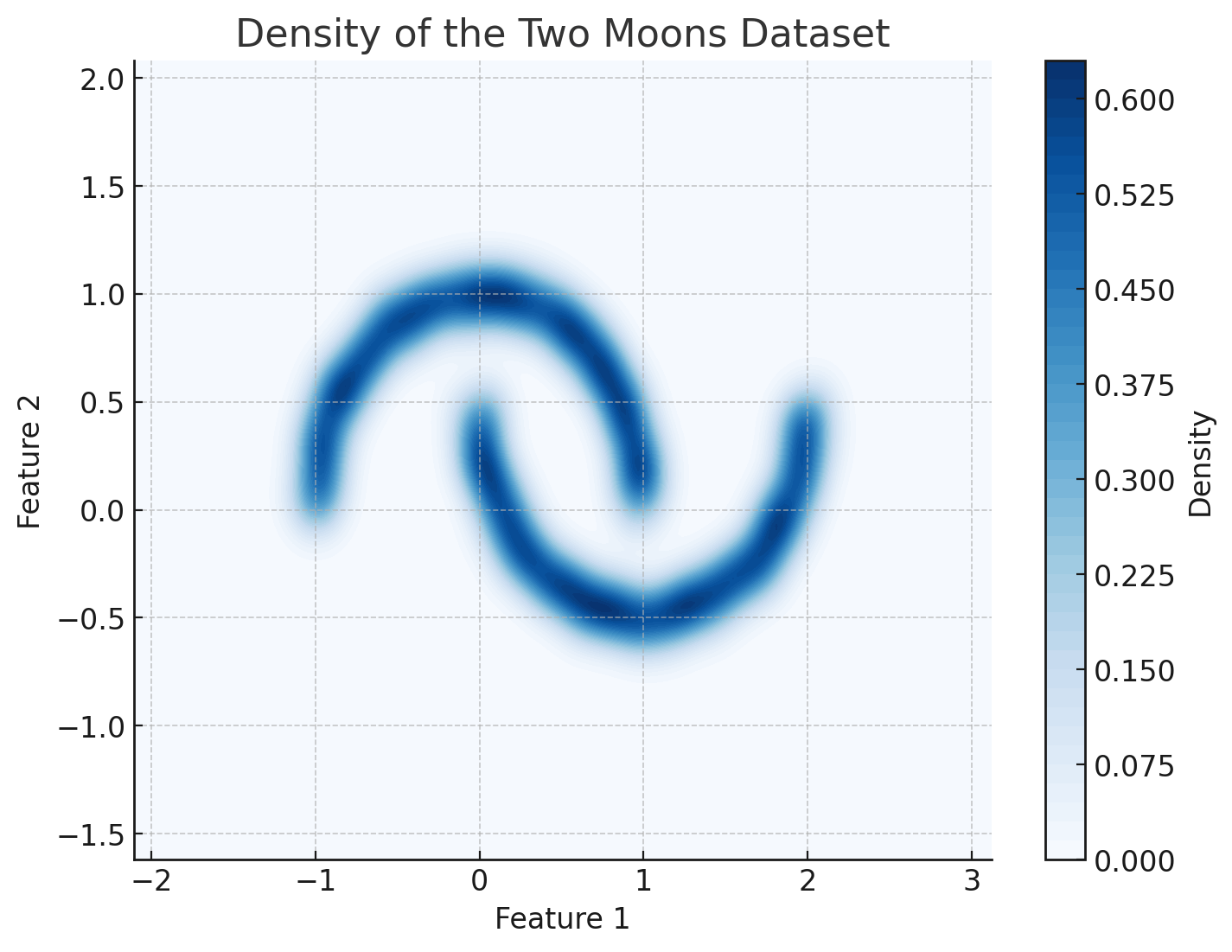

Has my model learned the underlying density?


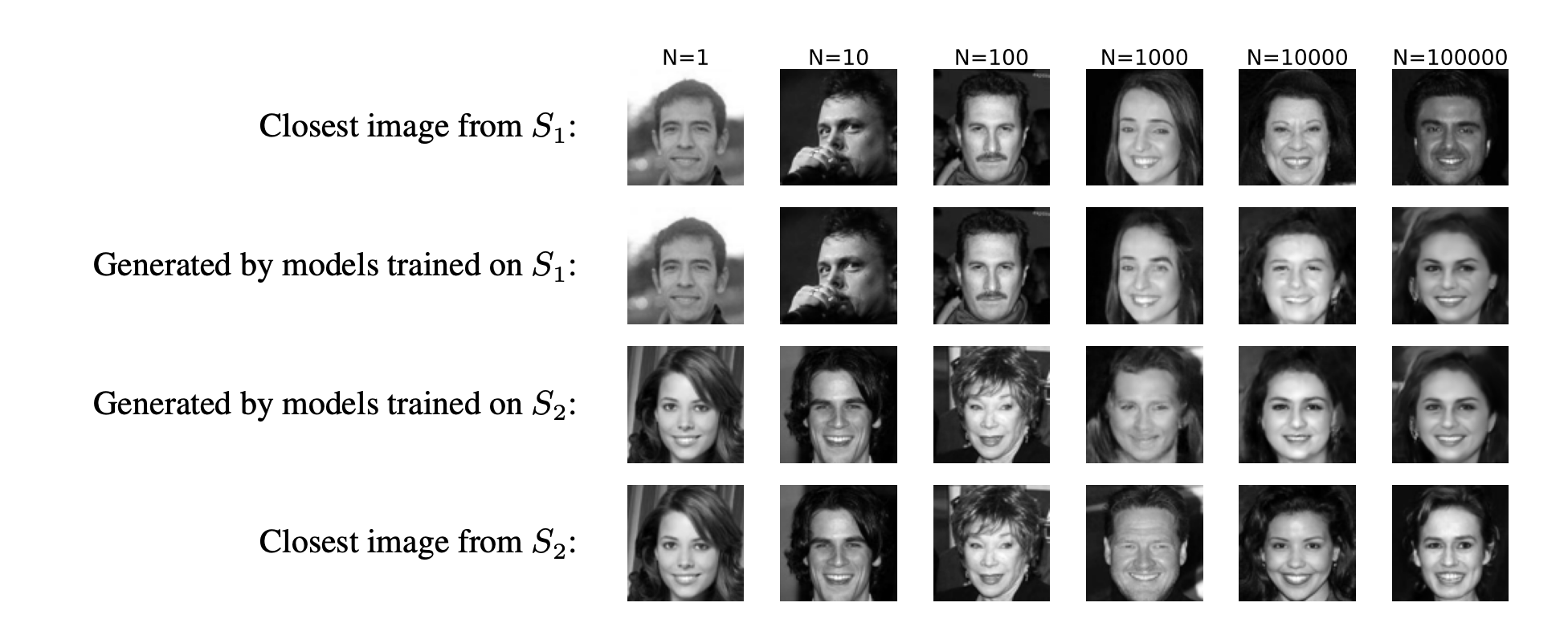

["Generalization in diffusion models arises from geometry-adaptive harmonic representations" Kadkhodaie et al (2024)]

Why and How do generative models work?

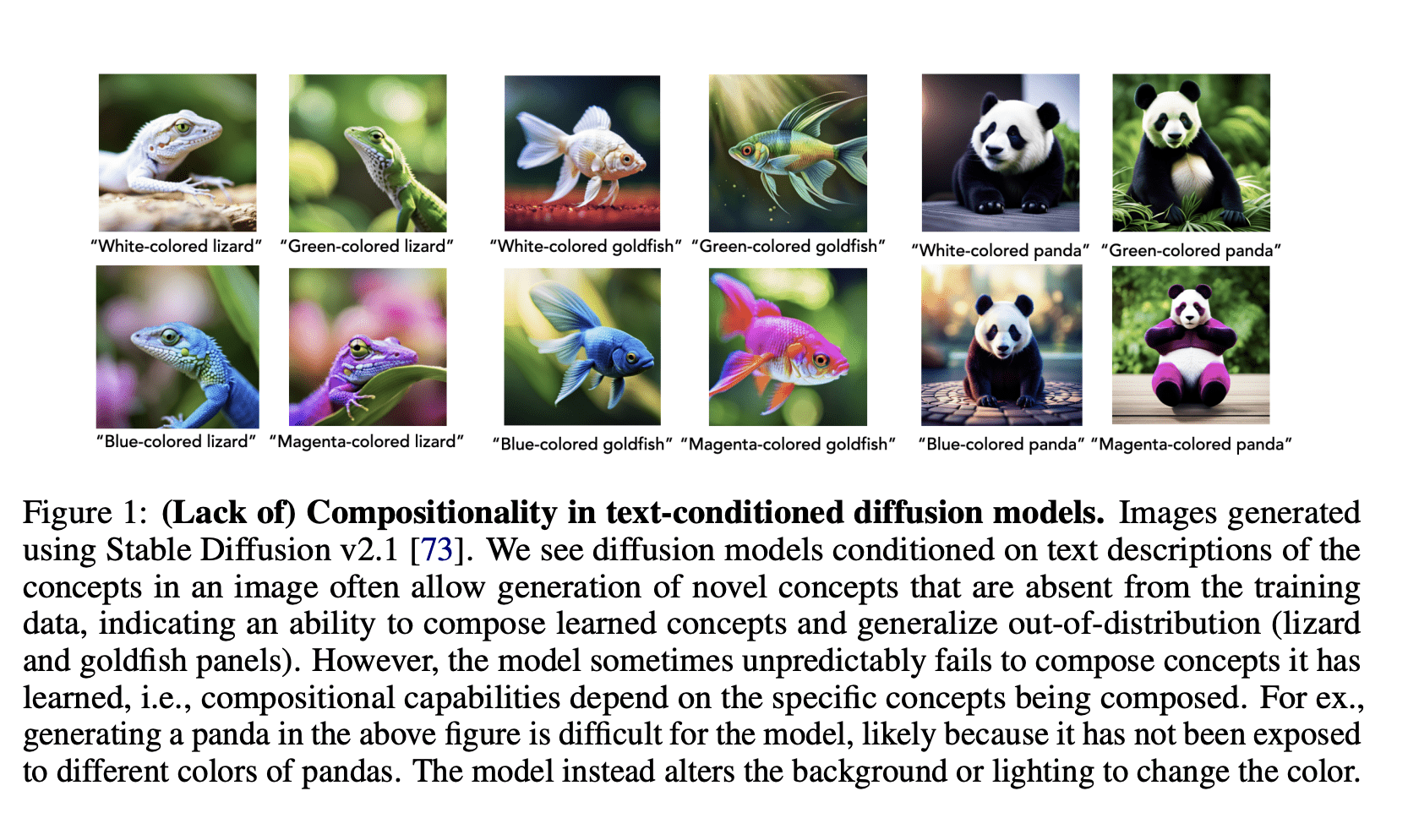

["Compositional Abilities Emerge Multiplicatively: Exploring Diffusion Models In a Synthetic Task" Okawa et al (2024)]

["An analytical theory of creativity in convolutional diffusion models" Kamb et al (2025)]

Generate = Understand?


["CosmoFlow: Scale-Aware Representation Learning for Cosmology with Flow Matching" Kannan, Qiu, Cuesta-Lazaro, Jeong (in prep)]


Tutorial

Gaussian

MNIST


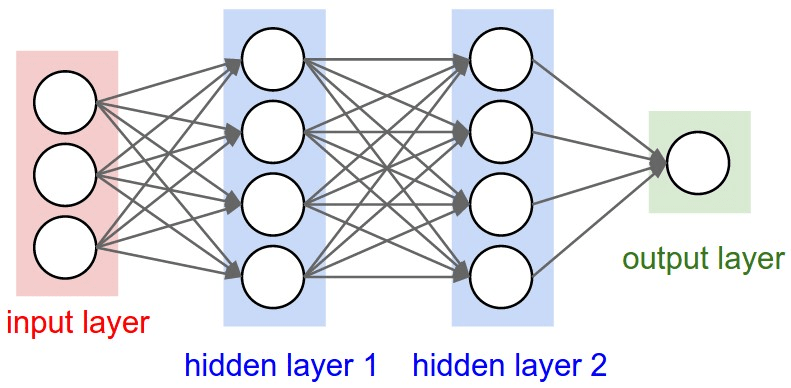

JAX Models

    import jax
    import jax.numpy as jnp
    import flax.linen as nn

    # Architecture
    class MLP(nn.Module):
        @nn.compact
        def __call__(self, x):
            # Linear
            x = nn.Dense(features=64)(x)
            # Non-linearity
            x = nn.silu(x)
            # Linear
            x = nn.Dense(features=64)(x)
            # Non-linearity
            x = nn.silu(x)
            # Linear (2 output features)
            x = nn.Dense(features=2)(x)
            return x

    model = MLP()
    example_input = jnp.ones((1, 4))

    # Parameters: created from a random key and an example input (fixes the input shape)
    params = model.init(jax.random.PRNGKey(0), example_input)

    # Call: apply the model with explicit parameters
    y = model.apply(params, example_input)


References

- Books by Kevin P. Murphy
  - Machine Learning: A Probabilistic Perspective
  - Probabilistic Machine Learning: Advanced Topics
- ML4Astro workshop https://ml4astro.github.io/icml2023/
- ProbAI summer school https://github.com/probabilisticai/probai-2023
- IAIFI Summer school
- Blogposts

cuestalz@mit.edu

From zero to generative - MIT/CfA Summer students - 2025

By carol cuesta
